Preface

This article gives a preliminary understanding of Compute Shaders and implements some simple effects. All the code is in:

https://github.com/Remyuu/Unity-Compute-Shader-Learn

The main branch holds the initial code. You can download the complete project and follow along. PS: each version of the code has its own branch.

This article covers how to use Compute Shaders to make:

- Post-processing effects

- Particle System

The previous article did not mention the GPU architecture because I felt it would be difficult to understand if I explained a bunch of terms right at the beginning. Now that you have some experience actually writing Compute Shaders, you can connect the abstract concepts with the actual code.

From the point of view of program execution, CUDA on the GPU can be described as a three-tier hierarchy:

- Grid – corresponds to a Kernel

- |-Block – A Grid has multiple Blocks, executing the same program

- | |-Thread – The most basic computing unit on the GPU

The Thread is the most basic unit of the GPU, and different threads naturally need to exchange information. To support a large number of parallel threads efficiently and to meet the data-exchange needs between them, memory is organized into multiple levels. From the storage point of view, it can also be divided into three layers:

- Per-Thread memory – private to a single Thread; access takes about one clock cycle (less than 1 nanosecond), which can be hundreds of times faster than global memory.

- Shared memory – shared by the Threads within a Block; much faster than global memory.

- Global memory – visible to all threads, but the slowest level and usually the bottleneck of the GPU. The Volta architecture uses HBM2 as the global memory of the device, while Turing uses GDDR6.

If data exceeds the capacity of one level, it is pushed down to a larger but slower level.

Shared Memory and L1 cache share the same physical space, but they are functionally different: the former needs to be managed manually, while the latter is automatically managed by hardware. My understanding is that Shared Memory is functionally similar to a programmable L1 cache.

In NVIDIA's CUDA architecture, the Streaming Multiprocessor (SM) is a processing unit on the GPU that is responsible for executing the Threads in Blocks. Stream Processors, also known as "CUDA cores", are the processing elements within an SM, and the SM schedules many threads across them in parallel. In general:

- GPU -> Multi-Processors (SMs) -> Stream Processors

That is, the GPU contains multiple SMs (multiprocessors), each of which contains multiple stream processors. Each stream processor is responsible for executing the computing instructions of one or more threads.

On the GPU, a Thread is the smallest unit that performs calculations. A Warp is the basic execution unit in CUDA.

In NVIDIA's CUDA architecture, each Warp usually contains 32 Threads (AMD's equivalent has 64). A Block is a thread group that contains multiple threads, and a Block can contain multiple Warps. A Kernel is a function executed on the GPU. You can think of it as a specific piece of code that is executed in parallel by all activated threads. In general:

- Kernel -> Grid -> Blocks -> Warps -> Threads

But in daily development, we usually need to execute far more than 32 Threads.

To resolve the mismatch between software requirements and hardware architecture, the GPU groups the threads that belong to the same block. Each group is called a "Warp", and each Warp contains a fixed number of threads. When the number of threads to execute exceeds what one Warp can hold, the GPU schedules additional Warps. The principle is to ensure that no thread is missed, even if it means starting more Warps.

For example, if a block has 128 threads and my graphics card wears a leather jacket (Nvidia, with 32 threads per warp), then the block has 128/32 = 4 warps. To give an extreme example, with 129 threads, 5 warps are started, and 31 thread slots sit idle! Therefore, when writing a compute shader, a*b*c in [numthreads(a,b,c)] should preferably be a multiple of 32 to reduce wasted CUDA cores.
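The warp arithmetic above is just a ceiling division. Here is a minimal CPU-side sketch in plain C#, assuming a warp size of 32; the class and method names (WarpMath, WarpsPerBlock, IdleLanes) are made up for illustration and are not part of the project.

```csharp
using System;

// Hypothetical helper, not part of the project: counts warps and idle lanes.
public static class WarpMath
{
    // Number of warps needed to cover every thread of a block (ceiling division).
    public static int WarpsPerBlock(int threadsPerBlock, int warpSize = 32)
    {
        return (threadsPerBlock + warpSize - 1) / warpSize;
    }

    // Thread slots that are allocated in the last warp but have no thread to run.
    public static int IdleLanes(int threadsPerBlock, int warpSize = 32)
    {
        return WarpsPerBlock(threadsPerBlock, warpSize) * warpSize - threadsPerBlock;
    }
}
```

With these definitions, WarpsPerBlock(128) is 4 with no idle lanes, while WarpsPerBlock(129) is 5 and IdleLanes(129) is 31. A [numthreads(8,8,1)] group has 64 threads, which fills exactly 2 warps.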

You must be confused after reading this. I drew a picture based on my personal understanding. Please point out any mistakes.

L3 post-processing effects

The current build is based on the Built-in Render Pipeline (BIRP); moving to SRP only requires a few code changes.

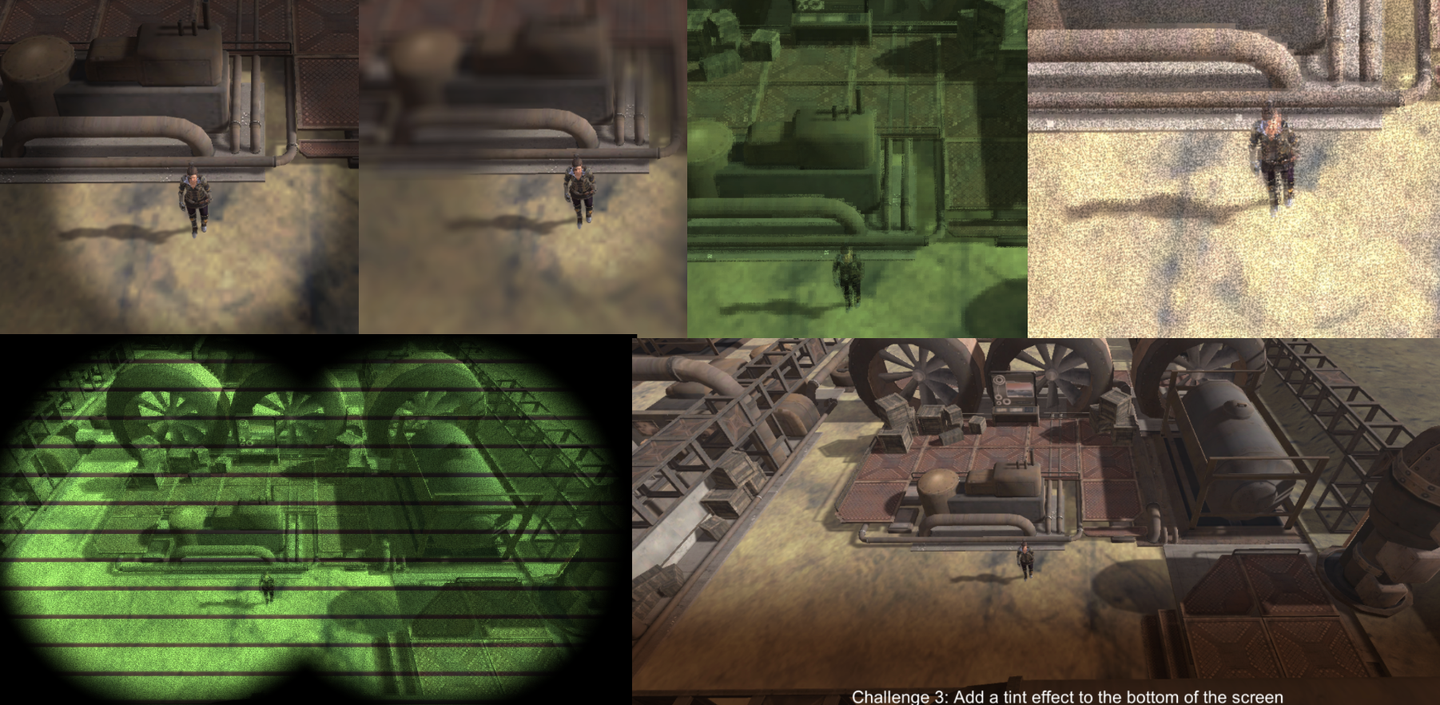

The key to this chapter is to build an abstract base class to manage the resources required by Compute Shader (Section 1). Then, based on this abstract base class, write some simple post-processing effects, such as Gaussian blur, grayscale effect, low-resolution pixel effect, and night vision effect. A brief summary of the knowledge points in this chapter:

- Get and process the Camera's rendering texture

- The ExecuteInEditMode attribute

- Using SystemInfo.supportsComputeShaders to check whether the system supports Compute Shaders

- Use of the Graphics.Blit() function (Blit stands for Bit Block Transfer)

- Using smoothstep() to create various effects

- Passing data between multiple kernels, and the shared keyword

1. Introduction and preparation

Post-processing effects require two textures, one read-only and the other read-write. As for where the textures come from: since this is post-processing, they must come from the camera, that is, the Target Texture on the Camera component.

- Source: Read-only

- Destination: Readable and writable, used for final output

Since a variety of post-processing effects will be implemented later, a base class is abstracted to reduce the workload in the later stage.

The following features are encapsulated in the base class:

- Initialize resources (create textures, buffers, etc.)

- Manage resources (for example, recreate buffers when screen resolution changes, etc.)

- Hardware check (check whether the current device supports Compute Shader)

Abstract class complete code link: https://pastebin.com/9pYvHHsh

First, when the script instance is enabled or attached to an active GameObject, OnEnable() is called, so the initialization goes there: check whether the hardware supports Compute Shaders, check whether a Compute Shader is bound in the Inspector, get the specified kernel, get the Camera component of the current GameObject, create the textures, and set the initialized state to true.

    if (!SystemInfo.supportsComputeShaders) ...
    if (!shader) ...
    kernelHandle = shader.FindKernel(kernelName);
    thisCamera = GetComponent<Camera>();
    if (!thisCamera) ...
    CreateTextures();
    init = true;

CreateTextures() creates two textures, one Source and one Destination, each with the size of the camera resolution.

    texSize.x = thisCamera.pixelWidth;
    texSize.y = thisCamera.pixelHeight;
    if (shader)
    {
        uint x, y;
        shader.GetKernelThreadGroupSizes(kernelHandle, out x, out y, out _);
        groupSize.x = Mathf.CeilToInt((float)texSize.x / (float)x);
        groupSize.y = Mathf.CeilToInt((float)texSize.y / (float)y);
    }
    CreateTexture(ref output);
    CreateTexture(ref renderedSource);
    shader.SetTexture(kernelHandle, "source", renderedSource);
    shader.SetTexture(kernelHandle, "outputrt", output);

The textures themselves are created like this:

    protected void CreateTexture(ref RenderTexture textureToMake, int divide = 1)
    {
        textureToMake = new RenderTexture(texSize.x / divide, texSize.y / divide, 0);
        textureToMake.enableRandomWrite = true;
        textureToMake.Create();
    }

This completes the initialization. When the camera finishes rendering the scene and is ready to display it on the screen, Unity calls OnRenderImage(), which in turn calls the Compute Shader to start the calculation. If initialization has not completed or there is no shader, the source is simply Blitted to the destination, that is, nothing is done. CheckResolution(out _) checks whether the resolution of the render texture needs to be updated; if so, it regenerates the textures. After that comes the Dispatch stage: the source image is copied into the texture bound to the kernel, and after the calculation is completed the result is copied to the destination.

    protected virtual void OnRenderImage(RenderTexture source, RenderTexture destination)
    {
        if (!init || shader == null)
        {
            Graphics.Blit(source, destination);
        }
        else
        {
            CheckResolution(out _);
            DispatchWithSource(ref source, ref destination);
        }
    }

Note that we don't use any SetData() or GetData() operations here. All the data is already on the GPU, so we can just instruct the GPU to do the work by itself, and the CPU should not get involved. If we fetched the texture back to main memory and then passed it to the GPU again, the performance would be very poor.

    protected virtual void DispatchWithSource(ref RenderTexture source, ref RenderTexture destination)
    {
        Graphics.Blit(source, renderedSource);
        shader.Dispatch(kernelHandle, groupSize.x, groupSize.y, 1);
        Graphics.Blit(output, destination);
    }

I didn't believe it, so I tried transferring the result back to the CPU and then back to the GPU anyway. The test results were quite shocking: the performance was more than 4 times worse. Therefore, we need to reduce the communication between the CPU and GPU, which is very important when using Compute Shaders.

    // Dumb method
    protected virtual void DispatchWithSource(ref RenderTexture source, ref RenderTexture destination)
    {
        // Blit the source texture to the texture for processing
        Graphics.Blit(source, renderedSource);

        // Process the texture using the compute shader
        shader.Dispatch(kernelHandle, groupSize.x, groupSize.y, 1);

        // Copy the output texture into a Texture2D object so we can read the data to the CPU
        Texture2D tempTexture = new Texture2D(renderedSource.width, renderedSource.height, TextureFormat.RGBA32, false);
        RenderTexture.active = output;
        tempTexture.ReadPixels(new Rect(0, 0, output.width, output.height), 0, 0);
        tempTexture.Apply();
        RenderTexture.active = null;

        // Pass the Texture2D data back to the GPU to a new RenderTexture
        RenderTexture tempRenderTexture = RenderTexture.GetTemporary(output.width, output.height);
        Graphics.Blit(tempTexture, tempRenderTexture);

        // Finally blit the processed texture to the target texture
        Graphics.Blit(tempRenderTexture, destination);

        // Clean up resources
        RenderTexture.ReleaseTemporary(tempRenderTexture);
        Destroy(tempTexture);
    }
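The group size computed in CreateTextures() is a ceiling division of the texture size by the kernel's thread-group size. A minimal plain-C# sketch of the same arithmetic using integers only; DispatchMath and GroupCount are hypothetical names for illustration.

```csharp
using System;

// Hypothetical helper, not part of the project.
public static class DispatchMath
{
    // Smallest number of thread groups whose threads cover `pixels` along one axis.
    // Integer equivalent of Mathf.CeilToInt((float)pixels / (float)threadsPerGroup).
    public static int GroupCount(int pixels, int threadsPerGroup)
    {
        if (threadsPerGroup <= 0) throw new ArgumentOutOfRangeException(nameof(threadsPerGroup));
        return (pixels + threadsPerGroup - 1) / threadsPerGroup;
    }
}
```

For a 1920 x 1080 target and [numthreads(8, 8, 1)], this gives 240 x 135 groups. For a width of 1366 it gives 171 groups, which cover 1368 columns, so a kernel may want to guard against ids that fall outside the texture.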

Next, we will start writing our first post-processing effect.

Interlude: Strange BUG

Here is a strange bug worth mentioning along the way.

In a Compute Shader, if the final output texture is named output, it causes problems on some graphics APIs such as Metal. The solution is to change the name.

    RWTexture2D<float4> outputrt;


2. RingHighlight effect

Create the RingHighlight class, inheriting from the base class just written.

Override the initialization method and specify the kernel.

    protected override void Init()
    {
        center = new Vector4();
        kernelName = "Highlight";
        base.Init();
    }

Override the rendering method. To achieve the effect of focusing on a certain character, you need to pass the character's screen-space coordinates (center) to the Compute Shader. And if the screen resolution changes before Dispatch, set the properties again.

    protected void SetProperties()
    {
        float rad = (radius / 100.0f) * texSize.y;
        shader.SetFloat("radius", rad);
        shader.SetFloat("edgeWidth", rad * softenEdge / 100.0f);
        shader.SetFloat("shade", shade);
    }

    protected override void OnRenderImage(RenderTexture source, RenderTexture destination)
    {
        if (!init || shader == null)
        {
            Graphics.Blit(source, destination);
        }
        else
        {
            if (trackedObject && thisCamera)
            {
                Vector3 pos = thisCamera.WorldToScreenPoint(trackedObject.position);
                center.x = pos.x;
                center.y = pos.y;
                shader.SetVector("center", center);
            }
            bool resChange = false;
            CheckResolution(out resChange);
            if (resChange) SetProperties();
            DispatchWithSource(ref source, ref destination);
        }
    }

To see parameter changes in real time while editing the Inspector panel, add the OnValidate() method.

    private void OnValidate()
    {
        if (!init) Init();
        SetProperties();
    }

On the GPU, how can we make a circle with no shading inside, a smooth transition at the edge of the circle, and shading outside the transition layer? Building on the point-in-circle test from the previous article, we can use smoothstep() to handle the transition layer.

    #pragma kernel Highlight

    Texture2D<float4> source;
    RWTexture2D<float4> outputrt;
    float radius;
    float edgeWidth;
    float shade;
    float4 center;

    float inCircle( float2 pt, float2 center, float radius, float edgeWidth ){
        float len = length(pt - center);
        return 1.0 - smoothstep(radius-edgeWidth, radius, len);
    }

    [numthreads(8, 8, 1)]
    void Highlight(uint3 id : SV_DispatchThreadID)
    {
        float4 srcColor = source[id.xy];
        float4 shadedSrcColor = srcColor * shade;
        float highlight = inCircle( (float2)id.xy, center.xy, radius, edgeWidth);
        float4 color = lerp( shadedSrcColor, srcColor, highlight );
        outputrt[id.xy] = color;
    }
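To check values by hand, the inCircle() mask can be mirrored on the CPU. This is a minimal plain-C# sketch, assuming the standard definition of smoothstep (clamp, then Hermite interpolation); CircleMask, Smoothstep and InCircle are illustrative names, and the 2D points are passed as separate floats to avoid any Unity dependency.

```csharp
using System;

// Hypothetical CPU-side reference, not part of the project.
public static class CircleMask
{
    // Standard smoothstep: 0 below edge0, 1 above edge1, smooth in between.
    public static float Smoothstep(float edge0, float edge1, float x)
    {
        float t = (x - edge0) / (edge1 - edge0);
        t = Math.Max(0f, Math.Min(1f, t));
        return t * t * (3f - 2f * t);
    }

    // 1 inside the circle, 0 outside, fading over the last edgeWidth units of the radius.
    public static float InCircle(float px, float py, float cx, float cy, float radius, float edgeWidth)
    {
        float dx = px - cx;
        float dy = py - cy;
        float len = (float)Math.Sqrt(dx * dx + dy * dy);
        return 1f - Smoothstep(radius - edgeWidth, radius, len);
    }
}
```

With a radius of 50 and an edge width of 10, a point at the center returns 1, a point 45 units away (the middle of the transition band) returns 0.5, and a point 60 units away returns 0. As in the shader, an edge width of 0 divides by zero, so keep it positive.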

Current version code:

- Compute Shader: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L3_RingHighlight/Assets/Shaders/RingHighlight.compute

- CPU: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L3_RingHighlight/Assets/Scripts/RingHighlight.cs

3. Blur effect

The principle of blur effect is very simple. The final effect can be obtained by taking the weighted average of the n*n pixels around each pixel sample.

But there is an efficiency problem. As we all know, reducing the number of texture samples is very important for optimization. If each pixel needs to sample the 20*20 pixels around it, then rendering one pixel requires 400 samples, which is obviously unacceptable. Moreover, sampling a whole rectangle of pixels around a single pixel is awkward to handle in a Compute Shader. How do we solve it?

The usual practice is to sample once horizontally and once vertically. What does this mean? For each pixel, only 20 pixels are sampled in the x direction and 20 pixels in the y direction, a total of 20+20 pixels are sampled, and then weighted average is taken. This method not only reduces the number of samples, but also conforms to the logic of Compute Shader. For horizontal sampling, set a kernel; for vertical sampling, set another kernel.

    #pragma kernel HorzPass
    #pragma kernel Highlight

Since Dispatch calls are executed in order, after computing the horizontal blur we use that result to sample vertically.

    shader.Dispatch(kernelHorzPassID, groupSize.x, groupSize.y, 1);
    shader.Dispatch(kernelHandle, groupSize.x, groupSize.y, 1);

After completing the blur operation, combine it with the RingHighlight from the previous section, and you're done!

One difference is, after calculating the horizontal blur, how do we pass the result to the next kernel? The answer is obvious: just use the shared keyword. The specific steps are as follows.

On the CPU side, declare a reference to the horizontally blurred texture, look up the kernel for the horizontal pass, and bind it.

    RenderTexture horzOutput = null;
    int kernelHorzPassID;

    protected override void Init()
    {
        ...
        kernelHorzPassID = shader.FindKernel("HorzPass");
        ...
    }

Additional space needs to be allocated on the GPU to store the results of the first kernel.

    protected override void CreateTextures()
    {
        base.CreateTextures();
        shader.SetTexture(kernelHorzPassID, "source", renderedSource);
        CreateTexture(ref horzOutput);
        shader.SetTexture(kernelHorzPassID, "horzOutput", horzOutput);
        shader.SetTexture(kernelHandle, "horzOutput", horzOutput);
    }

On the GPU side, the declarations look like this:

    shared Texture2D<float4> source;
    shared RWTexture2D<float4> horzOutput;
    RWTexture2D<float4> outputrt;

Another question: it seems to make no difference whether the shared keyword is included. In actual testing, different kernels can access the textures either way. So what is the point of shared?

As far as I can tell, shared in HLSL is a storage-class hint that marks a variable as shared between effects. In a Unity compute shader, resources declared at file scope are already visible to every kernel in that file, and what actually carries the result from one kernel to the next is binding the same RenderTexture to both kernels with SetTexture(), as done above. So here the keyword mostly documents intent, which matches the test result.

When calculating the pixels at the border, there may be a situation where the number of available pixels is insufficient. Either the remaining pixels on the left are insufficient for blurRadius, or the remaining pixels on the right are insufficient. Therefore, first calculate the safe left index, and then calculate the maximum number that can be taken from left to right.

    [numthreads(8, 8, 1)]
    void HorzPass(uint3 id : SV_DispatchThreadID)
    {
        int left = max(0, (int)id.x-blurRadius);
        int count = min(blurRadius, (int)id.x) + min(blurRadius, source.Length.x - (int)id.x);
        float4 color = 0;
        uint2 index = uint2((uint)left, id.y);
        [unroll(100)]
        for(int x=0; x ...

(The rest of the kernel is cut off here; the full version is in the links below.)

Current version code:

- Compute Shader: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L3_BlurEffect/Assets/Shaders/BlurHighlight.compute

- CPU: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L3_BlurEffect/Assets/Scripts/BlurHighlight.cs
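The border handling in this section can be tried out on the CPU. The sketch below is plain C# on a single row of grayscale values and reuses the left and count formulas from the HorzPass fragment. The loop body (sum count consecutive pixels starting at left, then divide by count) is my assumption, since the listing is cut off; BoxBlur and HorizontalPass are hypothetical names.

```csharp
using System;

// Hypothetical CPU-side sketch, not the project's kernel.
public static class BoxBlur
{
    // One horizontal averaging pass over a single row of values.
    public static float[] HorizontalPass(float[] row, int blurRadius)
    {
        if (blurRadius < 1) throw new ArgumentOutOfRangeException(nameof(blurRadius));
        int width = row.Length;
        float[] result = new float[width];
        for (int x = 0; x < width; x++)
        {
            // Safe left index: never go below column 0.
            int left = Math.Max(0, x - blurRadius);
            // Pixels available to the left plus pixels available from x to the right.
            int count = Math.Min(blurRadius, x) + Math.Min(blurRadius, width - x);
            float sum = 0f;
            for (int i = 0; i < count; i++)
            {
                sum += row[left + i]; // assumed loop body: accumulate the window
            }
            result[x] = sum / count;  // assumed: plain average of the window
        }
        return result;
    }
}
```

For the row {0, 0, 4, 0, 0} and a radius of 1, this returns {0, 0, 2, 2, 0}. With these formulas the window for an interior pixel runs from x - blurRadius to x + blurRadius - 1, and left + count never exceeds the row width. A vertical pass would do the same along columns, reading the output of this pass.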

4. Gaussian Blur

The difference from the above is that after sampling, a plain average is no longer taken; instead, a Gaussian function is used to weight the samples:

$$G(x) = \frac{1}{\sqrt{2\pi}\,\sigma} e^{-\frac{x^2}{2\sigma^2}}$$

where $\sigma$ is the standard deviation, which controls the width.

For more Blur content: https://www.gamedeveloper.com/programming/four-tricks-for-fast-blurring-in-software-and-hardware#close-modal

Since the amount of calculation is not small, it would be very time-consuming to calculate this formula once for each pixel. We use the pre-calculation method to transfer the calculation results to the GPU through the Buffer. Since both kernels need to use it, add a shared when declaring the Buffer.

    float[] SetWeightsArray(int radius, float sigma)
    {
        int total = radius * 2 + 1;
        float[] weights = new float[total];
        float sum = 0.0f;
        for (int n=0; n ...

(The rest of this listing is cut off here; see the full code below.)
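As a rough illustration of the pre-calculation idea, here is a minimal plain-C# sketch that fills a weight table of 2 * radius + 1 entries from the Gaussian function and normalizes it so the weights sum to 1. It is not the author's SetWeightsArray; GaussianKernel and Weights are hypothetical names, and normalizing by the sum is my assumption about how the table is finished.

```csharp
using System;

// Hypothetical helper, not part of the project.
public static class GaussianKernel
{
    // Returns 2 * radius + 1 weights centered on index `radius`, normalized to sum to 1.
    public static float[] Weights(int radius, float sigma)
    {
        int total = radius * 2 + 1;
        float[] weights = new float[total];
        float sum = 0f;
        for (int n = 0; n < total; n++)
        {
            int x = n - radius; // signed offset from the center sample
            // The constant 1 / (sqrt(2 * pi) * sigma) is omitted: it cancels when normalizing.
            weights[n] = (float)Math.Exp(-(x * x) / (2.0 * sigma * sigma));
            sum += weights[n];
        }
        for (int n = 0; n < total; n++)
        {
            weights[n] /= sum;
        }
        return weights;
    }
}
```

The table is symmetric around the center entry, which is always the largest. A larger sigma flattens the weights toward a plain average, and a smaller one concentrates them on the center pixel.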

Full code:

- https://pastebin.com/0qWtUKgy

- https://pastebin.com/A6mDKyJE

5. Low-resolution effects

GPU: It’s really a refreshing computing experience.

Make a high-definition texture look blocky without changing its resolution. The implementation is very simple: for every n*n block of pixels, only the color of the pixel in the lower left corner is used. Taking advantage of integer division, the id.x index is first divided by n and then multiplied by n (and likewise for id.y).

    uint2 index = (uint2(id.x, id.y)/3) * 3;
    float3 srcColor = source[index].rgb;
    float3 finalColor = srcColor;

The effect is already there, but it is too sharp, so add noise to soften the jagged edges.

    uint2 index = (uint2(id.x, id.y)/3) * 3;
    float noise = random(id.xy, time);
    float3 srcColor = lerp(source[id.xy].rgb, source[index], noise);
    float3 finalColor = srcColor;

Each pixel in an n*n block no longer takes the color of the lower left corner directly; it takes a random interpolation between its original color and the color of the lower left corner. The effect is much more refined. When n is relatively large, you can also see the following effect. It is not very good-looking, but it could still be explored in some glitch-style directions.

If you want to get a noisy picture, you can try adding coefficients at both ends of lerp, for example:

float3 srcColor = lerp(source[id.xy].rgb * 2, source[index],noise);
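The divide-then-multiply trick from this section is easy to check on the CPU. A minimal plain-C# sketch for one axis, assuming non-negative coordinates as in the shader's uint ids; Pixelate and Snap are hypothetical names.

```csharp
using System;

// Hypothetical helper, not part of the project.
public static class Pixelate
{
    // Snaps a non-negative coordinate down to the start of its block of size blockSize.
    // Integer division drops the remainder, so multiplying back lands on the block origin.
    public static int Snap(int coordinate, int blockSize)
    {
        return (coordinate / blockSize) * blockSize;
    }
}
```

With a block size of 3, the coordinates 6, 7 and 8 all map to 6, and 9 starts the next block. Applying the same snap to x and y gives the lower left pixel of each n*n block.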

6. Grayscale Effect and Tinting

The process of converting a color image to a grayscale image involves converting the RGB value of each pixel into a single color value. This color value is a weighted average of the RGB values. There are two methods here, one is a simple average, and the other is a weighted average that conforms to human eye perception.

1. Average method (simple but inaccurate):

$$Gray = \frac{R + G + B}{3}$$

This method gives equal weight to all color channels.

2. Weighted average method (more accurate, reflects human eye perception):

$$Gray = 0.299R + 0.587G + 0.114B$$

This method gives different weights to different color channels based on the fact that the human eye is more sensitive to green, less sensitive to red, and least sensitive to blue. (The difference is hard to see in the screenshot below; I can't tell them apart, lol.)
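The two methods are easiest to compare on pure colors. A minimal plain-C# sketch, using the same 0.299 / 0.587 / 0.114 weights that appear in this section's shader code; Grayscale, Average and Luma are hypothetical names.

```csharp
using System;

// Hypothetical helper, not part of the project.
public static class Grayscale
{
    // Simple average: every channel counts equally.
    public static float Average(float r, float g, float b)
    {
        return (r + g + b) / 3f;
    }

    // Weighted average: green counts most, blue least.
    public static float Luma(float r, float g, float b)
    {
        return r * 0.299f + g * 0.587f + b * 0.114f;
    }
}
```

Pure green (0, 1, 0) and pure blue (0, 0, 1) both average to about 0.333, but their weighted values are 0.587 and 0.114, so green stays bright and blue turns dark. White (1, 1, 1) gives 1 under both methods because the weights sum to 1.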

After weighting, the gray value is simply mixed (multiplied) with the tint color, and a final lerp gives a result with controllable tint strength.

    uint2 index = (uint2(id.x, id.y)/6) * 6;
    float noise = random(id.xy, time);
    float3 srcColor = lerp(source[id.xy].rgb, source[index], noise);
    // float3 finalColor = srcColor;
    float3 grayScale = (srcColor.r+srcColor.g+srcColor.b)/3.0;
    // float3 grayScale = srcColor.r*0.299f+srcColor.g*0.587f+srcColor.b*0.114f;
    float3 tinted = grayScale * tintColor.rgb;
    float3 finalColor = lerp(srcColor, tinted, tintStrength);
    outputrt[id.xy] = float4(finalColor, 1);

Tinted with a wasteland color:

7. Screen scan line effect

First, uvY normalizes the y coordinate to [0,1].

lines is a parameter that controls the number of scan lines.

Then add a time offset; the coefficient controls the scroll speed. You can expose a parameter to control how fast the lines move.

    float uvY = (float)id.y/(float)source.Length.y;
    float scanline = saturate(frac(uvY * lines + time * 3));

These "lines" are too fat to look like lines, so put them on a diet.

    float uvY = (float)id.y/(float)source.Length.y;
    float scanline = saturate(smoothstep(0.1, 0.2, frac(uvY * lines + time * 3)));

Then lerp the colors.

    float uvY = (float)id.y/(float)source.Length.y;
    float scanline = saturate(smoothstep(0.1, 0.2, frac(uvY * lines + time * 3)) + 0.3);
    finalColor = lerp(source[id.xy].rgb * 0.5, finalColor, scanline);

Before and after the "weight loss": take whichever you need!

8. Night Vision Effect

This section combines everything above to create a night vision device effect. First, make a single-eye effect.

    float2 pt = (float2)id.xy;
    float2 center = (float2)(source.Length >> 1);
    float inVision = inCircle(pt, center, radius, edgeWidth);
    float3 blackColor = float3(0,0,0);
    finalColor = lerp(blackColor, finalColor, inVision);

The difference between the binocular effect and the monocular effect is that there are two circle centers. The two calculated masks can be merged using max() or saturate().

    float2 pt = (float2)id.xy;
    float2 centerLeft = float2(source.Length.x / 3.0, source.Length.y / 2);
    float2 centerRight = float2(source.Length.x / 3.0 * 2.0, source.Length.y / 2);
    float inVisionLeft = inCircle(pt, centerLeft, radius, edgeWidth);
    float inVisionRight = inCircle(pt, centerRight, radius, edgeWidth);
    float3 blackColor = float3(0,0,0);
    // float inVision = max(inVisionLeft, inVisionRight);
    float inVision = saturate(inVisionLeft + inVisionRight);
    finalColor = lerp(blackColor, finalColor, inVision);

Current version code:

- Compute Shader: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L3_NightVision/Assets/Shaders/NightVision.compute

- CPU: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L3_NightVision/Assets/Scripts/NightVision.cs

9. Smooth transition lines

Think about how we should draw a smooth straight line on the screen.

The smoothstep() function can do this. Readers familiar with this function can skip this section. This function is used to create a smooth gradient. smoothstep(edge0, edge1, x) outputs a gradient from 0 to 1 when x is between edge0 and edge1. If x < edge0, it returns 0; if x > edge1, it returns 1. Its output value is calculated with Hermite interpolation:

$$t = \mathrm{clamp}\left(\frac{x - edge0}{edge1 - edge0},\ 0,\ 1\right), \qquad \mathrm{smoothstep}(edge0, edge1, x) = t^2 (3 - 2t)$$

    float onLine(float position, float center, float lineWidth, float edgeWidth)
    {
        float halfWidth = lineWidth / 2.0;
        float edge0 = center - halfWidth - edgeWidth;
        float edge1 = center - halfWidth;
        float edge2 = center + halfWidth;
        float edge3 = center + halfWidth + edgeWidth;
        return smoothstep(edge0, edge1, position) - smoothstep(edge2, edge3, position);
    }

In the above code, the parameters passed in have been normalized to [0,1]. position is the position of the point being tested, center is the center of the line, lineWidth is the actual width of the line, and edgeWidth is the width of the soft edge used for the smooth transition. I am really unhappy with my ability to express myself! As for how it is calculated, I will draw a picture for you to understand!

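The difference of two smoothsteps in onLine() can be checked numerically on the CPU. This is a minimal plain-C# sketch mirroring the shader function, assuming the standard smoothstep definition; LineMask, Smoothstep and OnLine are illustrative names.

```csharp
using System;

// Hypothetical CPU-side reference, not part of the project.
public static class LineMask
{
    // Standard smoothstep: clamp to [0,1], then Hermite interpolation.
    static float Smoothstep(float edge0, float edge1, float x)
    {
        float t = (x - edge0) / (edge1 - edge0);
        t = Math.Max(0f, Math.Min(1f, t));
        return t * t * (3f - 2f * t);
    }

    // Rises from 0 to 1 across [edge0, edge1], stays at 1 over the line,
    // then falls back to 0 across [edge2, edge3].
    public static float OnLine(float position, float center, float lineWidth, float edgeWidth)
    {
        float halfWidth = lineWidth / 2f;
        float edge0 = center - halfWidth - edgeWidth;
        float edge1 = center - halfWidth;
        float edge2 = center + halfWidth;
        float edge3 = center + halfWidth + edgeWidth;
        return Smoothstep(edge0, edge1, position) - Smoothstep(edge2, edge3, position);
    }
}
```

With center 0.5, lineWidth 0.2 and edgeWidth 0.1, the edges sit at 0.3, 0.4, 0.6 and 0.7. A position of 0.5 gives 1, positions 0.2 and 0.8 give 0, and 0.35 (halfway up the rising edge) gives about 0.5.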

Think about how to draw a circle with a smooth transition.

For each point, first calculate the vector from the center of the circle to the point and store it in position, then calculate its length and store it in len.

Imitating the two-smoothstep difference above, a ring is produced by subtracting the outer edge's interpolation result from the inner edge's.

    float circle(float2 position, float2 center, float radius, float lineWidth, float edgeWidth)
    {
        position -= center;
        float len = length(position);
        float result = smoothstep(radius - lineWidth / 2.0 - edgeWidth, radius - lineWidth / 2.0, len)
                     - smoothstep(radius + lineWidth / 2.0, radius + lineWidth / 2.0 + edgeWidth, len);
        return result;
    }

10. Scanline Effect

Then add a horizontal line, a vertical line, and a few circles to create a radar scanning effect.

    float3 color = float3(0.0f, 0.0f, 0.0f);
    color += onLine(uv.y, center.y, 0.002, 0.001) * axisColor.rgb; // xAxis
    color += onLine(uv.x, center.x, 0.002, 0.001) * axisColor.rgb; // yAxis
    color += circle(uv, center, 0.2f, 0.002, 0.001) * axisColor.rgb;
    color += circle(uv, center, 0.3f, 0.002, 0.001) * axisColor.rgb;
    color += circle(uv, center, 0.4f, 0.002, 0.001) * axisColor.rgb;

Then draw a sweeping scan line with a trail.

    float sweep(float2 position, float2 center, float radius, float lineWidth, float edgeWidth)
    {
        float2 direction = position - center;
        float theta = time + 6.3;
        float2 circlePoint = float2(cos(theta), -sin(theta)) * radius;
        float projection = clamp(dot(direction, circlePoint) / dot(circlePoint, circlePoint), 0.0, 1.0);
        float lineDistance = length(direction - circlePoint * projection);
        float gradient = 0.0;
        const float maxGradientAngle = PI * 0.5;
        if (length(direction) < radius)
        {
            float angle = fmod(theta + atan2(direction.y, direction.x), PI2);
            gradient = clamp(maxGradientAngle - angle, 0.0, maxGradientAngle) / maxGradientAngle * 0.5;
        }
        return gradient + 1.0 - smoothstep(lineWidth, lineWidth + edgeWidth, lineDistance);
    }

Add it to the color.

... color += sweep(uv, center, 0.45f, 0.003, 0.001) * sweepColor.rgb; ...
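The projection and lineDistance lines in sweep() compute the distance from a point to a line segment that starts at the circle center. A minimal plain-C# sketch of just that step, with the segment starting at the origin and the 2D vectors passed as separate floats; SegmentDistance and Distance are hypothetical names.

```csharp
using System;

// Hypothetical CPU-side reference, not part of the project.
public static class SegmentDistance
{
    // Distance from point p to the segment that runs from the origin to b.
    public static float Distance(float px, float py, float bx, float by)
    {
        // dot(p, b) / dot(b, b): how far along b the projection of p falls (0 = start, 1 = end).
        float t = (px * bx + py * by) / (bx * bx + by * by);
        // Clamp so the closest point stays on the segment instead of the infinite line.
        t = Math.Max(0f, Math.Min(1f, t));
        float dx = px - bx * t;
        float dy = py - by * t;
        return (float)Math.Sqrt(dx * dx + dy * dy);
    }
}
```

For a segment ending at (2, 0), the point (1, 1) projects to the middle of the segment and is 1 unit away. The points (3, 0) and (-1, 0) project outside the segment, so the clamp picks the nearest endpoint and both are also 1 unit away. In sweep(), this distance is then fed to smoothstep() to give the scan line a soft edge.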

Current version code:

- Compute Shader: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L3_HUDOverlay/Assets/Shaders/HUDOverlay.compute

- CPU: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L3_HUDOverlay/Assets/Scripts/HUDOverlay.cs

11. Gradient background shadow effect

This effect can be used in subtitles or some explanatory text. Although you can directly add a texture to the UI Canvas, using Compute Shader can achieve more flexible effects and resource optimization.

The background of subtitles and dialogue text is usually at the bottom of the screen, and the top is left unprocessed. A higher contrast is also required, so the original picture is converted to grayscale and darkened by a shade factor.

    if (id.y < (uint)tintHeight)
    {
        float3 grayScale = (srcColor.r + srcColor.g + srcColor.b) * 0.33 * tintColor.rgb;
        float3 shaded = lerp(srcColor.rgb, grayScale, tintStrength) * shade;
        ... // continued below
    }
    else
    {
        color = srcColor;
    }

Gradient effect.

    ... // continued from above
    float srcAmount = smoothstep(tintHeight-edgeWidth, (float)tintHeight, (float)id.y);
    ... // continued below

Finally, lerp once more.

    ... // continued from above
    color = lerp(float4(shaded, 1), srcColor, srcAmount);

12. Summary/Quiz

If id.xy = [100, 30], what would be the return value of inCircle((float2)id.xy, float2(130, 40), 40, 0.1)?

When creating a blur effect, which answer describes our approach best?

Which answer would create a blocky low-resolution version of the source image?

What is smoothstep(5, 10, 6)?

If a and b are both vectors, which answer best describes dot(a,b)/dot(b,b)?

What is _MainTex_TexelSize.x if _MainTex has a resolution of 512 x 256 pixels?

13. Use Blit and Material for post-processing

In addition to using a Compute Shader for post-processing, there is another simple method.

    // .cs
    Graphics.Blit(source, dest, material, passIndex);

    // .shader
    Pass
    {
        CGPROGRAM
        #pragma vertex vert_img
        #pragma fragment frag
        fixed4 frag(v2f_img input) : SV_Target
        {
            return tex2D(_MainTex, input.uv);
        }
        ENDCG
    }

Here the image data is processed by the Shader attached to the Material.

So the question is, what is the difference between the two? And isn't the input a texture? Where do the vertices come from?

Answer:

The first question. This method is called "screen space shading" and is fully integrated into Unity's graphics pipeline. For simple full-screen passes its performance is often as good as or better than a Compute Shader. A Compute Shader, on the other hand, provides finer-grained control over GPU resources. It is not restricted by the graphics pipeline and can directly access and modify resources such as textures and buffers.

The second question. Pay attention to vert_img, a vertex function that is defined in UnityCG. When Graphics.Blit() is called with a material, Unity draws the incoming texture onto two triangles (a rectangle that fills the screen), and vert_img handles the vertices of that rectangle. So when we write post-processing with the material method, we only need to write the frag function.

In the next chapter, you will learn how to connect Material, Shader, Compute Shader and C#.
