Following the previous article

remoooo: Compute Shader Learning Notes (II) Post-processing Effects

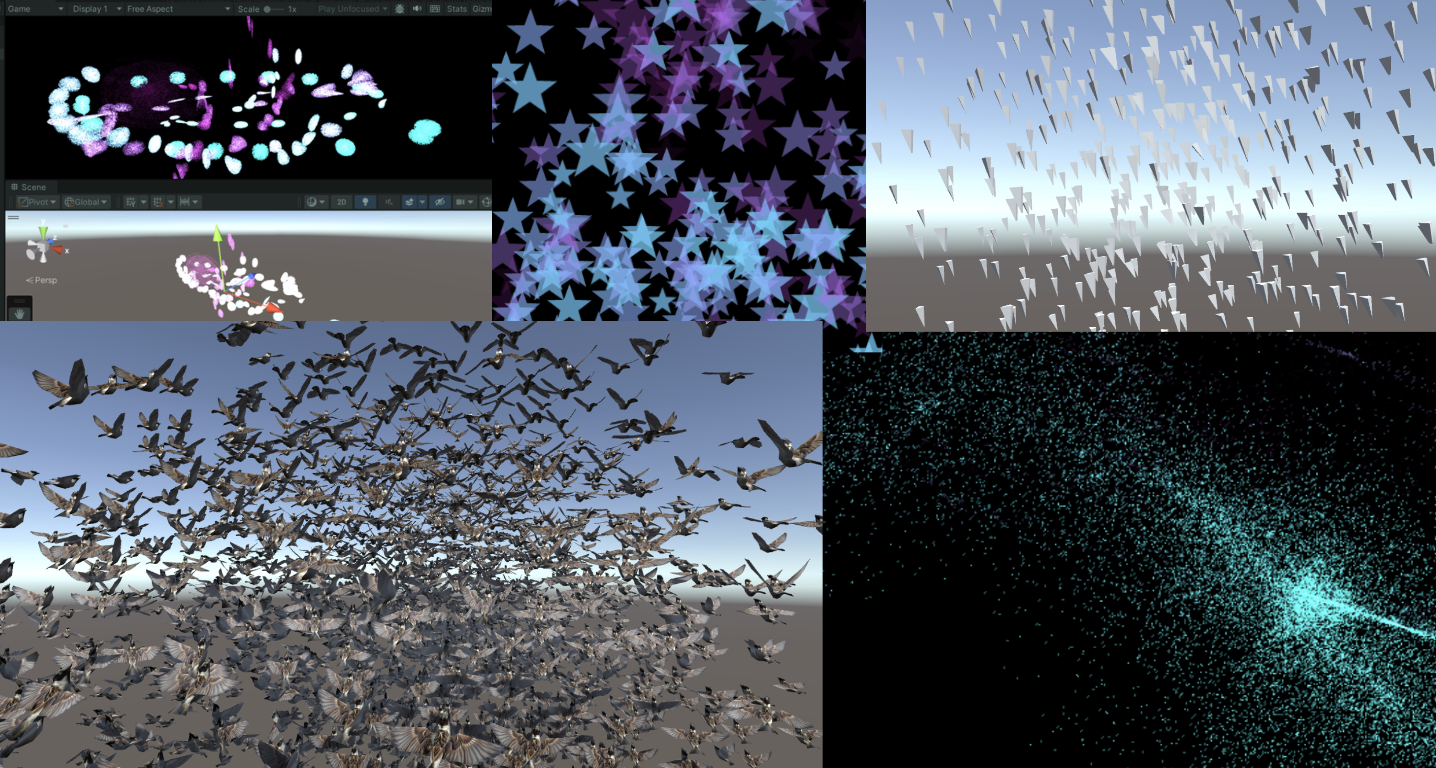

L4 particle effects and crowd behavior simulation

This chapter uses a Compute Shader to generate particles, and shows how to render them with DrawProcedural and with DrawMeshInstancedIndirect (GPU instancing).

Summary of knowledge points:

- Compute Shader, Material, C# script and Shader work together

- Graphics.DrawProcedural

- material.SetBuffer()

- xorshift random algorithm

- Swarm Behavior Simulation

- Graphics.DrawMeshInstancedIndirect

- Rotation, translation, and scaling matrices, homogeneous coordinates

- Surface Shader

- ComputeBufferType.Default

- #pragma instancing_options procedural:setup

- unity_InstanceID

- Skinned Mesh Renderer

- Data alignment

1. Introduction and preparation

In addition to being able to process large amounts of data at the same time, Compute Shader also has a key advantage, which is that the Buffer is stored in the GPU. Therefore, the data processed by the Compute Shader can be directly passed to the Shader associated with the Material, that is, the Vertex/Fragment Shader. The key here is that the material can also SetBuffer() like the Compute Shader, accessing data directly from the GPU's Buffer!

Using Compute Shader to create a particle system can fully demonstrate the powerful parallel capabilities of Compute Shader.

During rendering, the Vertex Shader reads the position and other attributes of each particle from the Compute Buffer and converts them into vertices on the screen. The Fragment Shader generates pixels from the information carried by these vertices (such as position and color). With Graphics.DrawProcedural, Unity can directly render these shader-generated vertices without a pre-defined mesh and without a Mesh Renderer, which is particularly effective for rendering a large number of particles.

2. Hello Particle

The steps are simple. Define the particle data (position, velocity and lifetime) in C#, initialize it and upload it to a Buffer, then bind that Buffer to both the Compute Shader and the Material. In the rendering stage, call Graphics.DrawProceduralNow in OnRenderObject() to draw the particles efficiently.

Create a new scene and create an effect: millions of particles follow the mouse and bloom into life, as follows:

Writing this makes me think a lot. The life cycle of a particle is very short, ignited in an instant like a spark, and disappearing like a meteor. Despite thousands of hardships, I am just a speck of dust among billions of dust, ordinary and insignificant. These particles may float randomly in space (Use the "Xorshift" algorithm to calculate the position of particle spawning), may have unique colors, but they can't escape the fate of being programmed. Isn't this a portrayal of my life? I play my role step by step, unable to escape the invisible constraints.

“God is dead! And how can we who have killed him not feel the greatest pain?” – Friedrich Nietzsche

Nietzsche not only announced the disappearance of religious beliefs, but also pointed out the sense of nothingness faced by modern people, that is, without the traditional moral and religious pillars, people feel unprecedented loneliness and lack of direction. Particles are defined and created in the C# script, move and die according to specific rules, which is quite similar to the state of modern people in the universe described by Nietzsche. Although everyone tries to find their own meaning, they are ultimately restricted by broader social and cosmic rules.

Life is full of various inevitable pains, reflecting the inherent emptiness and loneliness of human existence. The particle death logic we are about to write confirms what Nietzsche said: nothing in life is permanent. The particles in the same buffer will inevitably disappear at some point in the future, which reflects the loneliness of modern people described by Nietzsche. Individuals may feel unprecedented isolation and helplessness, so everyone is a lonely warrior who must learn to face the inner tornado and the indifference of the outside world alone.

But it doesn’t matter, “Summer will come again and again, and those who are meant to meet will meet again.” The particles in this article will also be regenerated after the end, embracing their own Buffer in the best state.

Summer will come around again. People who meet will meet again.

The current version of the code can be copied and run by yourself (all with comments):

- Compute Shader: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L4_First_Particle/Assets/Shaders/ParticleFun.compute

- CPU: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L4_First_Particle/Assets/Scripts/ParticleFun.cs

- Shader: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L4_First_Particle/Assets/Shaders/Particle.shader

Enough of the nonsense, let’s first take a look at how the C# script is written.

As usual, first define the particle buffer (structure), initialize it, and then pass it to the GPU. The key lies in the last three lines, which bind the Buffer to the shaders. There is nothing much to say about the code hidden behind the ellipses below; it is all routine, so it is only mentioned in comments.

    struct Particle
    {
        public Vector3 position; // Particle position
        public Vector3 velocity; // Particle velocity
        public float life;       // Particle life cycle
    }

    ComputeBuffer particleBuffer; // GPU Buffer
    ...
    // Init()
    // Initialize particle array
    Particle[] particleArray = new Particle[particleCount];
    for (int i = 0; i < particleCount; i++)
    {
        // Generate random positions and normalize
        ...
        // Set the initial position and velocity of the particle
        ...
        // Set the life cycle of the particle
        particleArray[i].life = Random.value * 5.0f + 1.0f;
    }
    // Create and set up the Compute Buffer
    ...
    // Find the kernel ID in the Compute Shader
    ...
    // Bind the Compute Buffer to the shader
    shader.SetBuffer(kernelID, "particleBuffer", particleBuffer);
    material.SetBuffer("particleBuffer", particleBuffer);
    material.SetInt("_PointSize", pointSize);

The key rendering stage is OnRenderObject(). material.SetPass selects the material pass used for rendering. DrawProceduralNow draws geometry without a traditional mesh. MeshTopology.Points sets the topology to points: the GPU treats each vertex as a point and does not form lines or faces between vertices. The second parameter, 1, is the number of vertices per instance, and particleCount is the number of instances. The GPU therefore renders one point per particle, and the vertex shader looks up each particle through SV_InstanceID.

    void OnRenderObject()
    {
        material.SetPass(0);
        Graphics.DrawProceduralNow(MeshTopology.Points, 1, particleCount);
    }

The current mouse position is obtained in OnGUI(), which may be called multiple times per frame. The z value is set to the camera's near clipping plane plus an offset. Here, 14 is added to get a world coordinate at a more suitable visual depth (you can adjust it yourself).

    void OnGUI()
    {
        Vector3 p = new Vector3();
        Camera c = Camera.main;
        Event e = Event.current;
        Vector2 mousePos = new Vector2();

        // Get the mouse position from Event.
        // Note that the y position from Event is inverted.
        mousePos.x = e.mousePosition.x;
        mousePos.y = c.pixelHeight - e.mousePosition.y;

        p = c.ScreenToWorldPoint(new Vector3(mousePos.x, mousePos.y, c.nearClipPlane + 14));

        cursorPos.x = p.x;
        cursorPos.y = p.y;
    }

The ComputeBuffer particleBuffer has now been passed to both the Compute Shader and the Shader.

Let's first look at the data structure of the Compute Shader. Nothing special.

    // Define particle data structure
    struct Particle
    {
        float3 position; // particle position
        float3 velocity; // particle velocity
        float life;      // particle remaining life time
    };

    // Structured buffer used to store and update particle data, which can be read and written from GPU
    RWStructuredBuffer<Particle> particleBuffer;

    // Variables set from the CPU
    float deltaTime;      // Time difference from the previous frame to the current frame
    float2 mousePosition; // Current mouse position

Here I will briefly talk about a particularly useful random number sequence generation method, the xorshift algorithm. It will be used to randomly control the movement direction of particles as shown above. The particles will move randomly in three-dimensional directions.

- For more information, please refer to: https://en.wikipedia.org/wiki/Xorshift

- Original paper link: https://www.jstatsoft.org/article/view/v008i14

This algorithm was proposed by George Marsaglia in 2003. Its advantages are that it is extremely fast and very space-efficient. Even the simplest Xorshift implementation has a very long pseudo-random number cycle.

The basic operations are shift and XOR. Hence the name of the algorithm. Its core is to maintain a non-zero state variable and generate random numbers by performing a series of shift and XOR operations on this state variable.

    // State variable for random number generation
    uint rng_state;

    uint rand_xorshift()
    {
        // Xorshift algorithm from George Marsaglia's paper
        rng_state ^= (rng_state << 13); // Shift the state left by 13 bits, then XOR it with the original state
        rng_state ^= (rng_state >> 17); // Shift the updated state right by 17 bits, and XOR it again
        rng_state ^= (rng_state << 5);  // Finally, shift the state left by 5 bits, and XOR it one last time
        return rng_state;               // Return the updated state as the generated random number
    }

The core of the basic Xorshift algorithm has been explained above, but different shift combinations produce multiple variants. The original paper also describes the Xorshift128 variant, which keeps a 128-bit state (four 32-bit words) and updates it with a few shift and XOR operations. The code is as follows:

    // C language version
    uint32_t xorshift128(void)
    {
        static uint32_t x = 123456789;
        static uint32_t y = 362436069;
        static uint32_t z = 521288629;
        static uint32_t w = 88675123;
        uint32_t t = x ^ (x << 11);
        x = y; y = z; z = w;
        w = w ^ (w >> 19) ^ (t ^ (t >> 8));
        return w;
    }

This gives a longer period and better statistical performance. The period of this variant is close to 2^128, which is very impressive.

In general, this algorithm is completely sufficient for game development, but it is not suitable for use in fields such as cryptography.
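To experiment with the basic generator outside Unity, here is a minimal CPU-side sketch in C. It uses the same 13/17/5 shift triple as the shader code; the function names and the idea of passing the state by pointer are assumptions made for this illustration, not part of the original project.

```c
#include <stdint.h>

/* One step of the 32-bit xorshift generator (shift triple 13, 17, 5).
   The state must be non-zero, otherwise it stays at zero forever. */
uint32_t xorshift32(uint32_t *state) {
    uint32_t x = *state;
    x ^= x << 13;
    x ^= x >> 17;
    x ^= x << 5;
    *state = x;
    return x;
}

/* Scale the 32-bit output by 1 / 2^32 to get a float in [0, 1]. */
float xorshift32_unit(uint32_t *state) {
    return (float)xorshift32(state) * (1.0f / 4294967296.0f);
}

/* Shift the unit value to a signed component in [-0.5, 0.5]. */
float xorshift32_signed(uint32_t *state) {
    return xorshift32_unit(state) - 0.5f;
}
```

On the GPU each thread needs its own non-zero state. Seeding it from the thread index is a common choice, but treat that as an assumption and check it against the linked source.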

When using this algorithm in a Compute Shader, note that the generated values cover the full uint32 range, so one more mapping is needed ([0, 2^32-1] is mapped to [0, 1]):

    float tmp = (1.0 / 4294967296.0); // conversion factor
    float(rand_xorshift()) * tmp

The direction of particle movement is signed, so we just need to subtract 0.5 from the result. Random movement in three directions:

    float f0 = float(rand_xorshift()) * tmp - 0.5;
    float f1 = float(rand_xorshift()) * tmp - 0.5;
    float f2 = float(rand_xorshift()) * tmp - 0.5;
    float3 normalF3 = normalize(float3(f0, f1, f2)) * 0.8f; // Scale the direction of movement

Each kernel invocation needs to do the following:

- First get the particle information of the previous frame in the Buffer

- Maintain the particle buffer (calculate the particle velocity, update the position and remaining life), and write the result back to the buffer

- If the remaining life is less than 0, regenerate the particle
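The three steps above can be sketched for a single particle in plain C. This is only an illustration under assumptions: the velocity rule is left out, and `spawn` and `spawn_life` are hypothetical inputs standing in for the mouse-based respawn position and the reset lifetime.

```c
typedef struct {
    float position[3];
    float velocity[3];
    float life;
} Particle;

/* Advance one particle by dt seconds, then respawn it if its life ran out.
   spawn and spawn_life are hypothetical parameters for this sketch. */
void update_particle(Particle *p, float dt, const float spawn[3], float spawn_life) {
    /* Step 1 and 2: read the previous state, integrate, age the particle. */
    for (int i = 0; i < 3; i++) {
        p->position[i] += p->velocity[i] * dt;
    }
    p->life -= dt;

    /* Step 3: a dead particle is regenerated in place, reusing its buffer slot. */
    if (p->life < 0.0f) {
        for (int i = 0; i < 3; i++) {
            p->position[i] = spawn[i];
            p->velocity[i] = 0.0f;
        }
        p->life = spawn_life;
    }
}
```

In the Compute Shader the same body runs once per thread, with the thread index selecting the particle.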

Generate particles. Use the random direction obtained from Xorshift above to set the particle's new position, then reset its life and velocity.

    // Set the new position and life of the particle
    particleBuffer[id].position = float3(normalF3.x + mousePosition.x, normalF3.y + mousePosition.y, normalF3.z + 3.0);
    particleBuffer[id].life = 4;                   // Reset life
    particleBuffer[id].velocity = float3(0,0,0);   // Reset velocity

Finally, the basic data structure of the Shader:

    struct Particle{
        float3 position;
        float3 velocity;
        float life;
    };

    struct v2f{
        float4 position : SV_POSITION;
        float4 color : COLOR;
        float life : LIFE;
        float size: PSIZE;
    };

    // particles' data
    StructuredBuffer<Particle> particleBuffer;

The vertex shader then calculates the particle's vertex color and clip-space position, and passes along the point size.

    v2f vert(uint vertex_id : SV_VertexID, uint instance_id : SV_InstanceID)
    {
        v2f o = (v2f)0;

        // Color
        float life = particleBuffer[instance_id].life;
        float lerpVal = life * 0.25f;
        o.color = fixed4(1.0f - lerpVal+0.1, lerpVal+0.1, 1.0f, lerpVal);

        // Position
        o.position = UnityObjectToClipPos(float4(particleBuffer[instance_id].position, 1.0f));
        o.size = _PointSize;

        return o;
    }

The fragment shader outputs the interpolated color.

    float4 frag(v2f i) : COLOR
    {
        return i.color;
    }

At this point, you can get the above effect.

3. Quad particles

In the previous section, each particle only had one point, which was not interesting. Now let's turn a point into a Quad. In Unity, there is no Quad, only a fake Quad composed of two triangles.

Let's start working on it, based on the code above. Define the vertices in C#, the size of a Quad.

    // struct
    struct Vertex
    {
        public Vector3 position;
        public Vector2 uv;
        public float life;
    }

    const int SIZE_VERTEX = 6 * sizeof(float);

    public float quadSize = 0.1f; // Quad size

On a per-particle basis, set the UV coordinates of the six vertices for use in the vertex shader, and draw them in the order specified by Unity.

    index = i*6;
    // Triangle 1 - bottom-left, top-left, top-right
    vertexArray[index].uv.Set(0,0);
    vertexArray[index+1].uv.Set(0,1);
    vertexArray[index+2].uv.Set(1,1);
    // Triangle 2 - bottom-left, top-right, bottom-right
    vertexArray[index+3].uv.Set(0,0);
    vertexArray[index+4].uv.Set(1,1);
    vertexArray[index+5].uv.Set(1,0);

Finally, the array is uploaded to the Buffer. halfSize is passed to the Compute Shader so that it can calculate the position of each Quad vertex.

    vertexBuffer = new ComputeBuffer(numVertices, SIZE_VERTEX);
    vertexBuffer.SetData(vertexArray);
    shader.SetBuffer(kernelID, "vertexBuffer", vertexBuffer);
    shader.SetFloat("halfSize", quadSize*0.5f);
    material.SetBuffer("vertexBuffer", vertexBuffer);

In the rendering stage, the points are replaced by triangles, six vertices per particle.

    void OnRenderObject()
    {
        material.SetPass(0);
        Graphics.DrawProceduralNow(MeshTopology.Triangles, 6, numParticles);
    }

Change the Shader so that it receives the vertex data and a texture for display. Alpha blending is required.

    _MainTex("Texture", 2D) = "white" {}
    ...
    Tags{ "Queue"="Transparent" "RenderType"="Transparent" "IgnoreProjector"="True" }
    LOD 200
    Blend SrcAlpha OneMinusSrcAlpha
    ZWrite Off
    ...
    struct Vertex{
        float3 position;
        float2 uv;
        float life;
    };
    StructuredBuffer<Vertex> vertexBuffer;
    sampler2D _MainTex;

    v2f vert(uint vertex_id : SV_VertexID, uint instance_id : SV_InstanceID)
    {
        v2f o = (v2f)0;
        int index = instance_id*6 + vertex_id;
        float lerpVal = vertexBuffer[index].life * 0.25f;
        o.color = fixed4(1.0f - lerpVal+0.1, lerpVal+0.1, 1.0f, lerpVal);
        o.position = UnityWorldToClipPos(float4(vertexBuffer[index].position, 1.0f));
        o.uv = vertexBuffer[index].uv;
        return o;
    }

    float4 frag(v2f i) : COLOR
    {
        fixed4 color = tex2D( _MainTex, i.uv ) * i.color;
        return color;
    }

In the Compute Shader, add the vertex data and halfSize as inputs.

    struct Vertex
    {
        float3 position;
        float2 uv;
        float life;
    };
    RWStructuredBuffer<Vertex> vertexBuffer;
    float halfSize;

Calculate the positions of the six vertices of each Quad.

    // Set the vertex buffer
    int index = id.x * 6;

    // Triangle 1 - bottom-left, top-left, top-right
    vertexBuffer[index].position.x = p.position.x-halfSize;
    vertexBuffer[index].position.y = p.position.y-halfSize;
    vertexBuffer[index].position.z = p.position.z;
    vertexBuffer[index].life = p.life;
    vertexBuffer[index+1].position.x = p.position.x-halfSize;
    vertexBuffer[index+1].position.y = p.position.y+halfSize;
    vertexBuffer[index+1].position.z = p.position.z;
    vertexBuffer[index+1].life = p.life;
    vertexBuffer[index+2].position.x = p.position.x+halfSize;
    vertexBuffer[index+2].position.y = p.position.y+halfSize;
    vertexBuffer[index+2].position.z = p.position.z;
    vertexBuffer[index+2].life = p.life;

    // Triangle 2 - bottom-left, top-right, bottom-right
    vertexBuffer[index+3].position.x = p.position.x-halfSize;
    vertexBuffer[index+3].position.y = p.position.y-halfSize;
    vertexBuffer[index+3].position.z = p.position.z;
    vertexBuffer[index+3].life = p.life;
    vertexBuffer[index+4].position.x = p.position.x+halfSize;
    vertexBuffer[index+4].position.y = p.position.y+halfSize;
    vertexBuffer[index+4].position.z = p.position.z;
    vertexBuffer[index+4].life = p.life;
    vertexBuffer[index+5].position.x = p.position.x+halfSize;
    vertexBuffer[index+5].position.y = p.position.y-halfSize;
    vertexBuffer[index+5].position.z = p.position.z;
    vertexBuffer[index+5].life = p.life;

Mission accomplished.
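The six-vertex expansion can also be written as a loop over a small table of corner signs. The C sketch below is an alternative formulation for illustration, not the project's code: `CORNER` and `write_quad` are hypothetical names, and unlike the article (which sets the UVs once in C#) it derives the UVs from the same table.

```c
typedef struct {
    float position[3];
    float uv[2];
    float life;
} Vertex;

/* Corner signs in draw order:
   triangle 1 = bottom-left, top-left, top-right;
   triangle 2 = bottom-left, top-right, bottom-right. */
static const float CORNER[6][2] = {
    {-1.0f, -1.0f}, {-1.0f, 1.0f}, {1.0f, 1.0f},
    {-1.0f, -1.0f}, {1.0f, 1.0f}, {1.0f, -1.0f}
};

/* Write the six vertices of one quad centred on `center` into out[0..5]. */
void write_quad(Vertex *out, const float center[3], float half_size, float life) {
    for (int k = 0; k < 6; k++) {
        out[k].position[0] = center[0] + CORNER[k][0] * half_size;
        out[k].position[1] = center[1] + CORNER[k][1] * half_size;
        out[k].position[2] = center[2];
        /* Map the sign -1/+1 to the texture coordinate 0/1. */
        out[k].uv[0] = 0.5f * (CORNER[k][0] + 1.0f);
        out[k].uv[1] = 0.5f * (CORNER[k][1] + 1.0f);
        out[k].life = life;
    }
}
```

The struct holds six floats, which matches the `6 * sizeof(float)` stride used when the buffer is created.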

Current version code:

- Compute Shader: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L4_Quad/Assets/Shaders/QuadParticles.compute

- CPU: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L4_Quad/Assets/Scripts/QuadParticles.cs

- Shader: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L4_Quad/Assets/Shaders/QuadParticle.shader

In the next section, we will upgrade the Mesh to a prefab and try to simulate the flocking behavior of birds in flight.

4. Flocking simulation

Flocking is an algorithm that simulates the collective movement of animals such as flocks of birds and schools of fish. It is built on three basic behavioral rules, proposed by Craig Reynolds at SIGGRAPH 1987, and is often referred to as the "Boids" algorithm:

- Separation: particles cannot get too close to each other; there must be a sense of boundaries. Specifically, each particle looks at the particles within a certain radius around it and calculates a direction that avoids collision.

- Alignment: the velocity of an individual tends toward the average velocity of the group; there should be a sense of belonging. Specifically, the average velocity (both magnitude and direction) of the particles within visual range is calculated. This visual range is determined by the actual biological characteristics of the bird, which will be mentioned in the next section.

- Cohesion: the position of an individual tends toward the average position (the center of the group), to feel safe. Specifically, each particle finds the geometric center of its neighbors and calculates a movement vector toward it (the final result is the average position).

Think about it, which of the above three rules is the most difficult to implement?

Answer: Separation. Computing interactions between objects is expensive. Each individual has to compare its distance with every other individual, so the time complexity of the algorithm is O(n^2), where n is the number of particles. For example, with 1,000 particles, nearly 500,000 pairwise distance calculations may be required in each iteration. In the original paper, the unoptimized O(N^2) algorithm took about 95 seconds to render one frame with 80 birds, so a 300-frame animation took about 8 hours (95 s × 300 ≈ 7.9 hours).
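The numbers above are easy to reproduce. The small C sketch below (function names are hypothetical) counts unordered pairs, the comparisons made when every boid scans the whole array, and the total render time for a given per-frame cost.

```c
/* Unordered pairs among n boids: n * (n - 1) / 2. */
unsigned long pair_count(unsigned long n) {
    return n * (n - 1) / 2;
}

/* Comparisons when each of the n boids loops over all n entries. */
unsigned long naive_comparisons(unsigned long n) {
    return n * n;
}

/* Total render time in seconds for a fixed per-frame cost. */
unsigned long total_seconds(unsigned long seconds_per_frame, unsigned long frames) {
    return seconds_per_frame * frames;
}
```

For example, `pair_count(1000)` gives 499500 and `total_seconds(95, 300)` gives 28500 seconds, a little under 8 hours.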

Generally speaking, using a quadtree or spatial hashing method can optimize the calculation. You can also maintain a neighbor list to store the individuals around each individual at a certain distance. Of course, you can also use Compute Shader to perform hard calculations.

Without further ado, let’s get started.

First download the prepared project files (if not prepared in advance):

- Bird's Prefab: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/main/Assets/Prefabs/Boid.prefab

- Script: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/main/Assets/Scripts/SimpleFlocking.cs

- Compute Shader: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/main/Assets/Shaders/SimpleFlocking.compute

Then add it to an empty GO.

Start the project and you'll see a bunch of birds.

Below are some parameters for group behavior simulation.

    // Define the parameters for the crowd behavior simulation.
    public float rotationSpeed = 1f;       // Rotation speed.
    public float boidSpeed = 1f;           // Boid speed.
    public float neighbourDistance = 1f;   // Neighboring distance.
    public float boidSpeedVariation = 1f;  // Speed variation.
    public GameObject boidPrefab;          // Prefab of Boid object.
    public int boidsCount;                 // Number of Boids.
    public float spawnRadius;              // Radius of Boid spawn.
    public Transform target;               // The moving target of the crowd.

Except for the Boid prefab boidPrefab and the spawn radius spawnRadius, everything else needs to be passed to the GPU.

For the sake of convenience, let's take a deliberately naive shortcut in this section. We only calculate each bird's position and direction on the GPU, and then read the result back to the CPU for the following processing:

    ...
    boidsBuffer.GetData(boidsArray);
    // Update the position and direction of each bird
    for (int i = 0; i < boidsArray.Length; i++)
    {
        boids[i].transform.localPosition = boidsArray[i].position;
        if (!boidsArray[i].direction.Equals(Vector3.zero))
        {
            boids[i].transform.rotation = Quaternion.LookRotation(boidsArray[i].direction);
        }
    }

The Quaternion.LookRotation() method creates a rotation that makes an object face a specified direction.

Calculate the position of each bird in the Compute Shader.

    #pragma kernel CSMain
    #define GROUP_SIZE 256

    struct Boid{
        float3 position;
        float3 direction;
    };

    RWStructuredBuffer<Boid> boidsBuffer;

    float time;
    float deltaTime;
    float rotationSpeed;
    float boidSpeed;
    float boidSpeedVariation;
    float3 flockPosition;
    float neighborDistance;
    int boidsCount;

    [numthreads(GROUP_SIZE,1,1)]
    void CSMain (uint3 id : SV_DispatchThreadID)
    {
        … // Continue below
    }

First write the alignment and cohesion logic, and finally write the resulting position and direction to the Buffer.

    Boid boid = boidsBuffer[id.x];
    float3 separation = 0;            // Separation
    float3 alignment = 0;             // Alignment - direction
    float3 cohesion = flockPosition;  // Cohesion - position
    uint nearbyCount = 1;             // Count itself as a surrounding individual.
    for (int i=0; i …

This is the result of having no sense of boundaries (no separation term): all individuals appear to have a fairly close relationship and overlap.

Add the following code.

    if(distance(boid.position, temp.position) < neighborDistance)
    {
        float3 offset = boid.position - temp.position;
        float dist = length(offset);
        if(dist < neighborDistance)
        {
            dist = max(dist, 0.000001);
            separation += offset * (1.0/dist - 1.0/neighborDistance);
        }
        ...

The closer two Boids are, the larger 1.0/dist becomes, which means the separation force should be greater. 1.0/neighborDistance is a constant based on the defined neighbor distance. The difference between the two determines how strongly the separation force responds to distance. If the distance between two Boids is exactly neighborDistance, the value is zero (no separation force). If the distance is less than neighborDistance, the value is positive, and the smaller the distance, the larger the value.
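The behavior of this weight is easy to check on the CPU. The C sketch below mirrors the shader expression; the function names are hypothetical, and the caller is assumed to supply `dist` as the length of `offset`.

```c
/* Separation weight 1/dist - 1/neighbor_distance.
   It is zero at the neighbor radius and grows as the boids get closer. */
float separation_weight(float dist, float neighbor_distance) {
    if (dist >= neighbor_distance) {
        return 0.0f;                 /* outside the radius: no push */
    }
    if (dist < 0.000001f) {
        dist = 0.000001f;            /* same clamp as the shader, avoids dividing by zero */
    }
    return 1.0f / dist - 1.0f / neighbor_distance;
}

/* Accumulate the push away from one neighbor.
   offset = self position - neighbor position, dist = length of offset. */
void add_separation(float sum[3], const float offset[3], float dist, float neighbor_distance) {
    float w = separation_weight(dist, neighbor_distance);
    for (int i = 0; i < 3; i++) {
        sum[i] += offset[i] * w;
    }
}
```

With a neighbor distance of 1, a boid at distance 0.5 gets weight 1 and a boid at distance 0.25 gets weight 3, so the push grows quickly at close range.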

Current code: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L4_Flocking/Assets/Shaders/SimpleFlocking.compute

The next section will use Instanced Mesh to improve performance.

5. GPU Instancing Optimization

First, let's review the content of this chapter. In both the "Hello Particle" and "Quad Particle" examples, we used the Instanced technology (Graphics.DrawProceduralNow()) to pass the particle position calculated by the Compute Shader directly to the VertexFrag shader.

DrawMeshInstancedIndirect used in this section is used to draw a large number of geometric instances. The instances are similar, but the positions, rotations or other parameters are slightly different. Compared with DrawProceduralNow, which regenerates the geometry and renders it every frame, DrawMeshInstancedIndirect only needs to set the instance information once, and then the GPU can render all instances at once based on this information. Use this function to render grass and groups of animals.

This function has many parameters, only some of which are used.

    Graphics.DrawMeshInstancedIndirect(boidMesh, 0, boidMaterial, bounds, argsBuffer);

- boidMesh: The bird Mesh to draw.

- subMeshIndex: The submesh index to draw. Usually 0 if the mesh has only one submesh.

- boidMaterial: The material applied to the instanced object.

- Bounds: The bounding box specifies the drawing range. The instantiated object will only be rendered in the area within this bounding box. Used to optimize performance.

- argsBuffer: ComputeBuffer of parameters, including the number of indices of each instance's geometry and the number of instances.

What is this argsBuffer? This parameter is used to tell Unity which mesh we want to render and how many meshes we want to render! We can use a special Buffer as a parameter.

When initializing the shader, a special Buffer is created with the type ComputeBufferType.IndirectArguments. This type of buffer is passed to the GPU so that indirect drawing commands can be executed there. The first parameter of new ComputeBuffer here is 1, which means one args array (one array holds 5 uints). Don't mix the two up.
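The layout of those 5 uints can be pictured as a plain C struct. The order follows Unity's documentation for DrawMeshInstancedIndirect (index count per instance, instance count, start index location, base vertex location, start instance location); the struct and field names are made up for this sketch.

```c
#include <stdint.h>

/* One indirect draw argument record: five 32-bit unsigned values. */
typedef struct {
    uint32_t index_count_per_instance; /* args[0]: indices in the mesh (sub)mesh */
    uint32_t instance_count;           /* args[1]: how many instances to draw    */
    uint32_t start_index_location;     /* args[2]                                */
    uint32_t base_vertex_location;     /* args[3]                                */
    uint32_t start_instance_location;  /* args[4]                                */
} IndirectArgs;

/* Fill the two values this chapter sets; the remaining three stay at zero. */
IndirectArgs make_args(uint32_t index_count, uint32_t instance_count) {
    IndirectArgs a = { index_count, instance_count, 0u, 0u, 0u };
    return a;
}
```

The buffer therefore has a count of 1 and a stride of `5 * sizeof(uint)`, which is 20 bytes.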

    ComputeBuffer argsBuffer;
    ...
    argsBuffer = new ComputeBuffer(1, 5 * sizeof(uint), ComputeBufferType.IndirectArguments);
    if (boidMesh != null)
    {
        args[0] = (uint)boidMesh.GetIndexCount(0);
        args[1] = (uint)numOfBoids;
    }
    argsBuffer.SetData(args);
    ...
    Graphics.DrawMeshInstancedIndirect(boidMesh, 0, boidMaterial, bounds, argsBuffer);

Building on the previous section, an offset is added to the individual data structure and used for the direction offset in the Compute Shader. In addition, the initial direction is interpolated with Slerp: 70% keeps the original direction and 30% is random. The result of Slerp is a quaternion, which is converted to Euler angles through the quaternion's eulerAngles property and then passed into the constructor.

    public float noise_offset;
    ...
    Quaternion rot = Quaternion.Slerp(transform.rotation, Random.rotation, 0.3f);
    boidsArray[i] = new Boid(pos, rot.eulerAngles, offset);

After passing this new attribute noise_offset to the Compute Shader, a noise value in the range [-1, 1] is calculated and applied to the bird's speed.

    float noise = clamp(noise1(time / 100.0 + boid.noise_offset), -1, 1) * 2.0 - 1.0;
    float velocity = boidSpeed * (1.0 + noise * boidSpeedVariation);

Then the algorithm is optimized a little. The Compute Shader is basically the same.

    if (distance(boid_pos, boidsBuffer[i].position) < neighborDistance)
    {
        float3 tempBoid_position = boidsBuffer[i].position;
        float3 offset = boid.position - tempBoid_position;
        float dist = length(offset);
        if (dist …

The biggest difference is in the shader. This section uses a surface shader instead of a fragment shader. A surface shader is really a packaged vertex and fragment shader in which Unity has already done a lot of tedious work, such as lighting and shadows. You can still specify a vertex function.

When writing shaders for the material, instanced objects need special handling. For ordinary rendered objects, Unity supplies the position, rotation and other properties per object. For the instanced objects built here, these parameters change constantly, so the rendering pipeline needs a mechanism to set the position and parameters of each instance dynamically. The method used here is procedural instancing, which renders all instances at once instead of drawing them one by one. In other words, one-time batch rendering.

The shader uses the instanced technique. The instantiation phase is executed before vert. This way each instantiated object has its own rotation, translation, and scaling matrices.

Now we need to create a rotation matrix for each instantiated object. From the Buffer, we get the basic information of the bird calculated by the Compute Shader (in the previous section, the data was sent back to the CPU, and here it is directly sent to the Shader for instantiation):

In Shader, the data structure and related operations passed by Buffer are wrapped with the following macros.

    // .shader
    #ifdef UNITY_PROCEDURAL_INSTANCING_ENABLED
    struct Boid
    {
        float3 position;
        float3 direction;
        float noise_offset;
    };

    StructuredBuffer<Boid> boidsBuffer;
    #endif

Since the number of instances (the number of birds, which is also the size of the Buffer) was specified in args[1] for DrawMeshInstancedIndirect in C#, the Buffer can be indexed directly with unity_InstanceID.

    #pragma instancing_options procedural:setup

    void setup()
    {
        #ifdef UNITY_PROCEDURAL_INSTANCING_ENABLED
        _BoidPosition = boidsBuffer[unity_InstanceID].position;
        _Matrix = create_matrix(boidsBuffer[unity_InstanceID].position, boidsBuffer[unity_InstanceID].direction, float3(0.0, 1.0, 0.0));
        #endif
    }

The calculation of the space transformation matrix here involves homogeneous coordinates; you can review the GAMES101 course. A point is written as (x,y,z,1) and a vector (direction) as (x,y,z,0).

If you apply the rotation and the translation separately instead of using a single homogeneous matrix, the code is as follows:

    void setup()
    {
        #ifdef UNITY_PROCEDURAL_INSTANCING_ENABLED
        _BoidPosition = boidsBuffer[unity_InstanceID].position;
        _LookAtMatrix = look_at_matrix(boidsBuffer[unity_InstanceID].direction, float3(0.0, 1.0, 0.0));
        #endif
    }

    void vert(inout appdata_full v, out Input data)
    {
        UNITY_INITIALIZE_OUTPUT(Input, data);
        #ifdef UNITY_PROCEDURAL_INSTANCING_ENABLED
        v.vertex = mul(_LookAtMatrix, v.vertex);
        v.vertex.xyz += _BoidPosition;
        #endif
    }

That is not elegant enough. We can simply use homogeneous coordinates, where one matrix handles rotation, translation and scaling!

    void setup()
    {
        #ifdef UNITY_PROCEDURAL_INSTANCING_ENABLED
        _BoidPosition = boidsBuffer[unity_InstanceID].position;
        _Matrix = create_matrix(boidsBuffer[unity_InstanceID].position, boidsBuffer[unity_InstanceID].direction, float3(0.0, 1.0, 0.0));
        #endif
    }

    void vert(inout appdata_full v, out Input data)
    {
        UNITY_INITIALIZE_OUTPUT(Input, data);
        #ifdef UNITY_PROCEDURAL_INSTANCING_ENABLED
        v.vertex = mul(_Matrix, v.vertex);
        #endif
    }

Now, we are done! The current frame rate is nearly doubled compared to the previous section.
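To see why a single homogeneous matrix is enough, here is a small C sketch. It is not the project's create_matrix: it assumes an orthonormal basis (right, up, forward) has already been derived from the direction and up vector, and the function names and row-major layout are choices made for this illustration.

```c
/* Build a row-major 4x4 matrix: columns 0..2 hold the basis vectors
   (the rotation), column 3 holds the translation, last row is 0 0 0 1. */
void make_transform(float m[16], const float right[3], const float up[3],
                    const float forward[3], const float position[3]) {
    for (int r = 0; r < 3; r++) {
        m[r * 4 + 0] = right[r];
        m[r * 4 + 1] = up[r];
        m[r * 4 + 2] = forward[r];
        m[r * 4 + 3] = position[r];
    }
    m[12] = 0.0f;
    m[13] = 0.0f;
    m[14] = 0.0f;
    m[15] = 1.0f;
}

/* out = m * v for a homogeneous 4-component vector. */
void mul4(const float m[16], const float v[4], float out[4]) {
    for (int r = 0; r < 4; r++) {
        out[r] = m[r * 4 + 0] * v[0] + m[r * 4 + 1] * v[1]
               + m[r * 4 + 2] * v[2] + m[r * 4 + 3] * v[3];
    }
}
```

A point (w = 1) picks up the translation column, while a direction (w = 0) is only rotated. That is exactly the distinction between (x,y,z,1) and (x,y,z,0).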

Current version code:

- Compute Shader: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L4_Instanced/Assets/Shaders/InstancedFlocking.compute

- CPU: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L4_Instanced/Assets/Scripts/InstancedFlocking.cs

- Shader: https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L4_Instanced/Assets/Shaders/InstancedFlocking.shader

6. Apply skin animation

What we need to do in this section is to use the Animator component to grab the Mesh of each keyframe into the Buffer before instantiating the object. By selecting different indexes, we can get Mesh of different poses. The specific skeletal animation production is beyond the scope of this article.

You just need to modify the code based on the previous chapter and add the Animator logic. I have written comments below, you can take a look.

And the individual data structure is updated:

    struct Boid{
        float3 position;
        float3 direction;
        float noise_offset;
        float speed;     // not useful for now
        float frame;     // indicates the current frame index in the animation
        float3 padding;  // ensure data alignment
    };

Let's talk about alignment in detail. In a data structure, the size of the data should preferably be an integer multiple of 16 bytes.

- float3 position; (12 bytes)

- float3 direction; (12 bytes)

- float noise_offset; (4 bytes)

- float speed; (4 bytes)

- float frame; (4 bytes)

- float3 padding; (12 bytes)

Without padding, the size is 36 bytes, which is not a multiple of 16. With padding, the size is 48 bytes, perfect!
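The byte counts can be verified with a C mirror of the struct. This sketch assumes 4-byte floats and uses float arrays in place of float3; the compile-time checks fail the build if the size ever drifts away from 48 bytes.

```c
#include <stddef.h>

/* C mirror of the Boid struct; float[3] stands in for float3. */
typedef struct {
    float position[3];   /* 12 bytes, offset  0 */
    float direction[3];  /* 12 bytes, offset 12 */
    float noise_offset;  /*  4 bytes, offset 24 */
    float speed;         /*  4 bytes, offset 28 */
    float frame;         /*  4 bytes, offset 32 */
    float padding[3];    /* 12 bytes, offset 36 */
} Boid;

/* Compile-time checks (C11): 48 bytes, a multiple of 16. */
_Static_assert(sizeof(Boid) == 48, "Boid should be 48 bytes");
_Static_assert(sizeof(Boid) % 16 == 0, "Boid stride should be a multiple of 16");

/* Stride to use when creating the buffer on the CPU side. */
size_t boid_stride(void) {
    return sizeof(Boid);
}
```

The stride passed to the ComputeBuffer on the C# side has to match this size (12 floats), otherwise the GPU reads each element at the wrong offset.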

    private SkinnedMeshRenderer boidSMR; // References the SkinnedMeshRenderer component that contains the skinned mesh.
    private Animator animator;
    public AnimationClip animationClip;  // The animation clip, usually used to calculate animation-related parameters.
    private int numOfFrames;             // Number of frames in the animation, i.e. how many frames of data to store in the GPU buffer.
    public float boidFrameSpeed = 10f;   // Controls the speed at which the animation plays.
    MaterialPropertyBlock props;         // Passes parameters to the shader without creating a new material instance, so the
                                         // properties of this instance (such as color, lighting coefficient, etc.) can be
                                         // changed without affecting other objects using the same material.
    Mesh boidMesh;                       // Stores the mesh data baked from the SkinnedMeshRenderer.
    ...
    void Start()
    {
        // First initialize the Boid data here, then call GenerateSkinnedAnimationForGPUBuffer to prepare
        // the animation data, and finally call InitShader to set the Shader parameters required for rendering.
        ...
        // This property block is used only for avoiding an instancing bug.
        props = new MaterialPropertyBlock();
        props.SetFloat("_UniqueID", Random.value);
        ...
        InitBoids();
        GenerateSkinnedAnimationForGPUBuffer();
        InitShader();
    }

    void InitShader()
    {
        // This method configures the Shader and material properties so that the animation is displayed
        // correctly for each instance. Enabling or disabling frameInterpolation determines whether to
        // interpolate between animation frames for smoother animation.
        ...
        if (boidMesh) // Set by GenerateSkinnedAnimationForGPUBuffer
        ...
        shader.SetFloat("boidFrameSpeed", boidFrameSpeed);
        shader.SetInt("numOfFrames", numOfFrames);
        boidMaterial.SetInt("numOfFrames", numOfFrames);
        if (frameInterpolation && !boidMaterial.IsKeywordEnabled("FRAME_INTERPOLATION"))
            boidMaterial.EnableKeyword("FRAME_INTERPOLATION");
        if (!frameInterpolation && boidMaterial.IsKeywordEnabled("FRAME_INTERPOLATION"))
            boidMaterial.DisableKeyword("FRAME_INTERPOLATION");
    }

    void Update()
    {
        ...
        // The last two parameters:
        // 1. 0: Offset into the parameter buffer, used to specify where to start reading parameters.
        // 2. props: The MaterialPropertyBlock created earlier, containing properties shared by all instances.
        Graphics.DrawMeshInstancedIndirect(boidMesh, 0, boidMaterial, bounds, argsBuffer, 0, props);
    }

    void OnDestroy()
    {
        ...
        if (vertexAnimationBuffer != null) vertexAnimationBuffer.Release();
    }

    private void GenerateSkinnedAnimationForGPUBuffer()
    {
        ... // Continued
    }

To give the Shader a Mesh with a different pose at different times, GenerateSkinnedAnimationForGPUBuffer() extracts the mesh vertex data of each frame from the Animator and the SkinnedMeshRenderer, and stores it in a ComputeBuffer on the GPU for use in instanced rendering.

GetCurrentAnimatorStateInfo is called to obtain the state information of the current animation layer, which is used later for precise control of animation playback.

numOfFrames is determined using the power of two that is closest to the product of the animation length and the frame rate, which can optimize GPU memory access.
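As a rough illustration of that rounding, here is a minimal sketch that runs outside Unity. `ClosestPowerOfTwo` below is a hypothetical re-implementation written for this example, not Unity's `Mathf.ClosestPowerOfTwo` itself, and its tie-breaking rule (round up) is an assumption. The clip values are made up.

```csharp
using System;

public static class FrameCount
{
    // Hypothetical helper: nearest power of two to a positive value.
    // Ties resolve upward here; that rule is an assumption of this sketch.
    public static int ClosestPowerOfTwo(int value)
    {
        if (value <= 1) return 1;
        int next = 1;
        while (next < value) next <<= 1;   // smallest power of two >= value
        int prev = next >> 1;              // the power of two just below next
        return (value - prev < next - value) ? prev : next;
    }

    // Same expression shape as in the article: frame rate times clip length, rounded.
    public static int NumOfFrames(float frameRate, float lengthSeconds)
        => ClosestPowerOfTwo((int)(frameRate * lengthSeconds));

    public static void Main()
    {
        // Illustrative clips: 30 fps for 1.0 s, and 24 fps for 0.8 s.
        int a = NumOfFrames(30f, 1.0f);    // 30 samples round to 32
        int b = NumOfFrames(24f, 0.8f);    // 19 samples round to 16
        Console.WriteLine($"{a} frames, {b} frames");
        // The sampling step then becomes clip length divided by the frame count.
        Console.WriteLine($"perFrameTime for the first clip: {1.0f / a} s");
    }
}
```

Note that the rounded count can be larger or smaller than the clip's native frame count, so the clip is resampled at `length / numOfFrames` intervals rather than at its original frame times.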

Then create a ComputeBuffer, vertexAnimationBuffer, to store the vertex data of all frames.

In the for loop, bake all animation frames. Specifically, at each sampleTime, play the animation and update it immediately, then bake the mesh of the current animation frame into bakedMesh. Extract the vertices of the newly baked mesh, write them into the vertexAnimationData array, and finally upload the array to the GPU.
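The order in which the samples are stored matters for the shader later on: the baking loop in the listing that follows writes sample `i` of vertex `j` to index `(j * numOfFrames) + i`, so all frames of one vertex sit next to each other. A minimal sketch of that layout, with hypothetical helper names and made-up sizes:

```csharp
using System;

public static class VertexAnimationLayout
{
    // Flat index used when baking: all frames of vertex 0 first, then vertex 1, and so on.
    public static int FlatIndex(int vertexId, int frame, int numOfFrames)
        => vertexId * numOfFrames + frame;

    // Total buffer size in bytes when every entry is a float4 (16 bytes).
    public static long BufferBytes(int vertexCount, int numOfFrames)
        => (long)vertexCount * numOfFrames * 16;

    public static void Main()
    {
        int vertexCount = 3;   // illustrative values only
        int numOfFrames = 4;

        for (int v = 0; v < vertexCount; v++)
        {
            for (int f = 0; f < numOfFrames; f++)
            {
                Console.Write($"v{v}f{f}->{FlatIndex(v, f, numOfFrames)}  ");
            }
            Console.WriteLine();
        }
        Console.WriteLine($"buffer size: {BufferBytes(vertexCount, numOfFrames)} bytes");
    }
}
```

The vertex shader can then fetch a pose with the same expression, using the vertex id and the current frame of the instance.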

    // ...continued from above
    boidSMR = boidObject.GetComponentInChildren<SkinnedMeshRenderer>();
    boidMesh = boidSMR.sharedMesh;
    animator = boidObject.GetComponentInChildren<Animator>();
    int iLayer = 0;
    AnimatorStateInfo aniStateInfo = animator.GetCurrentAnimatorStateInfo(iLayer);
    Mesh bakedMesh = new Mesh();
    float sampleTime = 0;
    float perFrameTime = 0;
    numOfFrames = Mathf.ClosestPowerOfTwo((int)(animationClip.frameRate * animationClip.length));
    perFrameTime = animationClip.length / numOfFrames;
    var vertexCount = boidSMR.sharedMesh.vertexCount;
    // One float4 (16 bytes) per vertex per frame.
    vertexAnimationBuffer = new ComputeBuffer(vertexCount * numOfFrames, 16);
    Vector4[] vertexAnimationData = new Vector4[vertexCount * numOfFrames];
    for (int i = 0; i < numOfFrames; i++)
    {
        animator.Play(aniStateInfo.shortNameHash, iLayer, sampleTime);
        animator.Update(0f);
        boidSMR.BakeMesh(bakedMesh);
        for(int j = 0; j < vertexCount; j++)
        {
            Vector4 vertex = bakedMesh.vertices[j];
            vertex.w = 1;
            vertexAnimationData[(j * numOfFrames) + i] = vertex;
        }
        sampleTime += perFrameTime;
    }
    vertexAnimationBuffer.SetData(vertexAnimationData);
    boidMaterial.SetBuffer("vertexAnimation", vertexAnimationBuffer);
    boidObject.SetActive(false);

In the Compute Shader, update the frame variable stored in each boid's data structure.

    boid.frame = boid.frame + velocity * deltaTime * boidFrameSpeed;
    if (boid.frame >= numOfFrames) boid.frame -= numOfFrames;

Lerp between animation frames in the Shader. The left side is without frame interpolation, and the right side is with interpolation. The difference is very noticeable.


    void vert(inout appdata_custom v)
    {
        #ifdef UNITY_PROCEDURAL_INSTANCING_ENABLED
            #ifdef FRAME_INTERPOLATION
                v.vertex = lerp(vertexAnimation[v.id * numOfFrames + _CurrentFrame],
                                vertexAnimation[v.id * numOfFrames + _NextFrame],
                                _FrameInterpolation);
            #else
                v.vertex = vertexAnimation[v.id * numOfFrames + _CurrentFrame];
            #endif
            v.vertex = mul(_Matrix, v.vertex);
        #endif
    }
    void setup()
    {
        #ifdef UNITY_PROCEDURAL_INSTANCING_ENABLED
            _Matrix = create_matrix(boidsBuffer[unity_InstanceID].position,
                                    boidsBuffer[unity_InstanceID].direction,
                                    float3(0.0, 1.0, 0.0));
            _CurrentFrame = boidsBuffer[unity_InstanceID].frame;
            #ifdef FRAME_INTERPOLATION
                _NextFrame = _CurrentFrame + 1;
                if (_NextFrame >= numOfFrames) _NextFrame = 0;
                _FrameInterpolation = frac(boidsBuffer[unity_InstanceID].frame);
            #endif
        #endif
    }

It was not easy, but it is finally complete.
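For readers who want to check the frame bookkeeping without opening Unity, the wrap-around and the interpolation weights can be mirrored on the CPU. This is a minimal sketch under two assumptions: `velocity` is treated as a scalar speed, and the float-to-integer conversion of the frame truncates toward zero. The class and method names are hypothetical.

```csharp
using System;

public static class FramePlayback
{
    // Mirrors the compute shader step: advance the fractional frame and wrap it.
    public static float Advance(float frame, float velocity, float deltaTime,
                                float boidFrameSpeed, int numOfFrames)
    {
        frame += velocity * deltaTime * boidFrameSpeed;
        if (frame >= numOfFrames) frame -= numOfFrames;
        return frame;
    }

    // Mirrors setup(): split the fractional frame into the two frames to blend
    // and the blend weight between them.
    public static (int current, int next, float t) Split(float frame, int numOfFrames)
    {
        int current = (int)frame;            // assumed truncation, as for a non-negative frame
        int next = current + 1;
        if (next >= numOfFrames) next = 0;   // the last frame blends back into the first
        float t = frame - current;           // equals frac(frame) for non-negative values
        return (current, next, t);
    }

    public static void Main()
    {
        int numOfFrames = 32;                // illustrative value
        float frame = 31.5f;

        var before = Split(frame, numOfFrames);
        Console.WriteLine($"frame {frame}: blend {before.current} -> {before.next}, t = {before.t}");

        frame = Advance(frame, 1f, 1f, 1f, numOfFrames);
        var after = Split(frame, numOfFrames);
        Console.WriteLine($"frame {frame}: blend {after.current} -> {after.next}, t = {after.t}");
    }
}
```

The case worth noticing is the last frame: its blend partner is frame 0, which keeps the loop seamless when the animation wraps.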

Complete project link: https://github.com/Remyuu/Unity-Compute-Shader-Learn/tree/L4_Skinned/Assets/Scripts

8. Summary/Quiz

When rendering points which gives the best answer?

What are the three key steps in flocking?

When creating an arguments buffer for DrawMeshInstancedIndirect, how many uints are required?

We created the wing flapping by using a skinned mesh shader. True or False.

In a shader used by DrawMeshInstancedIndirect, which variable name gives the correct index for the instance?

References

- https://en.wikipedia.org/wiki/Boids

- Flocks, Herds, and Schools: A Distributed Behavioral Model
