Project address:

https://github.com/Remyuu/Unity-Compute-Shader-Learn

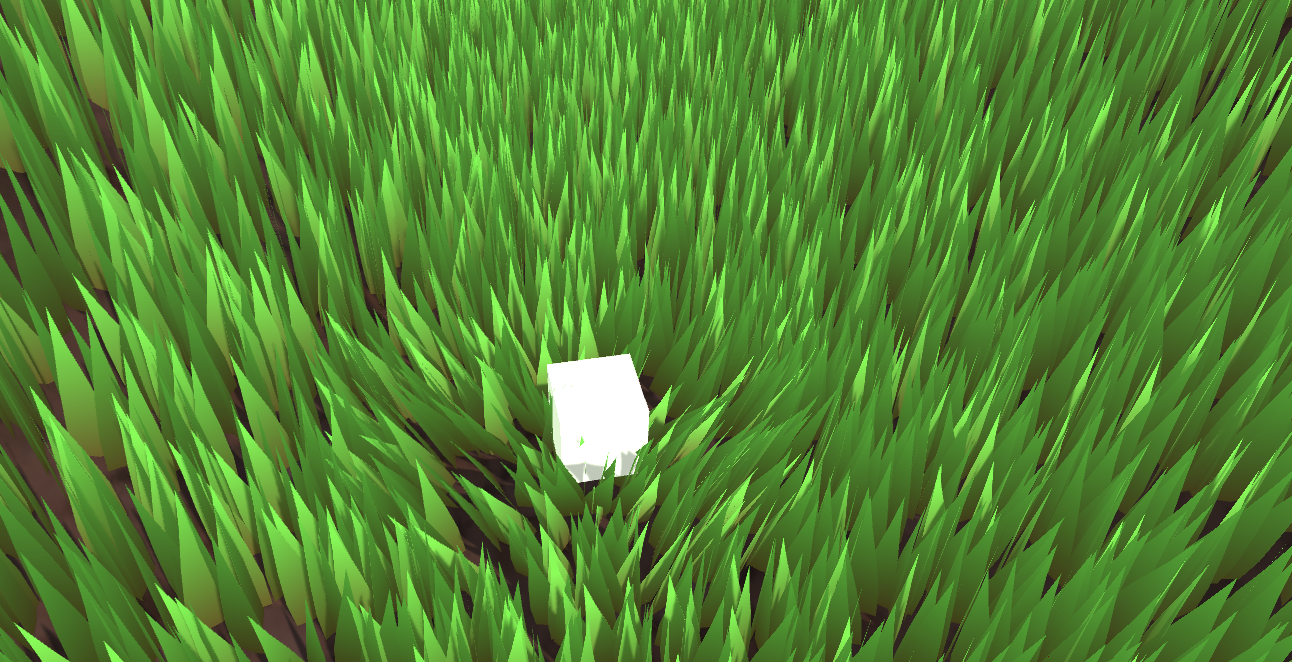

L5 Grass Rendering

The current result is still rough, and many details are far from polished; it is merely "implemented". I am still a beginner myself, so please correct me wherever I have written or done something poorly.

Summary of knowledge points:

- Grass Rendering Solution

- UNITY_PROCEDURAL_INSTANCING_ENABLED

- bounds.extents

- Raycasting

- Rodrigues' rotation formula

- Quaternion rotation

Preface 1

Reference articles:

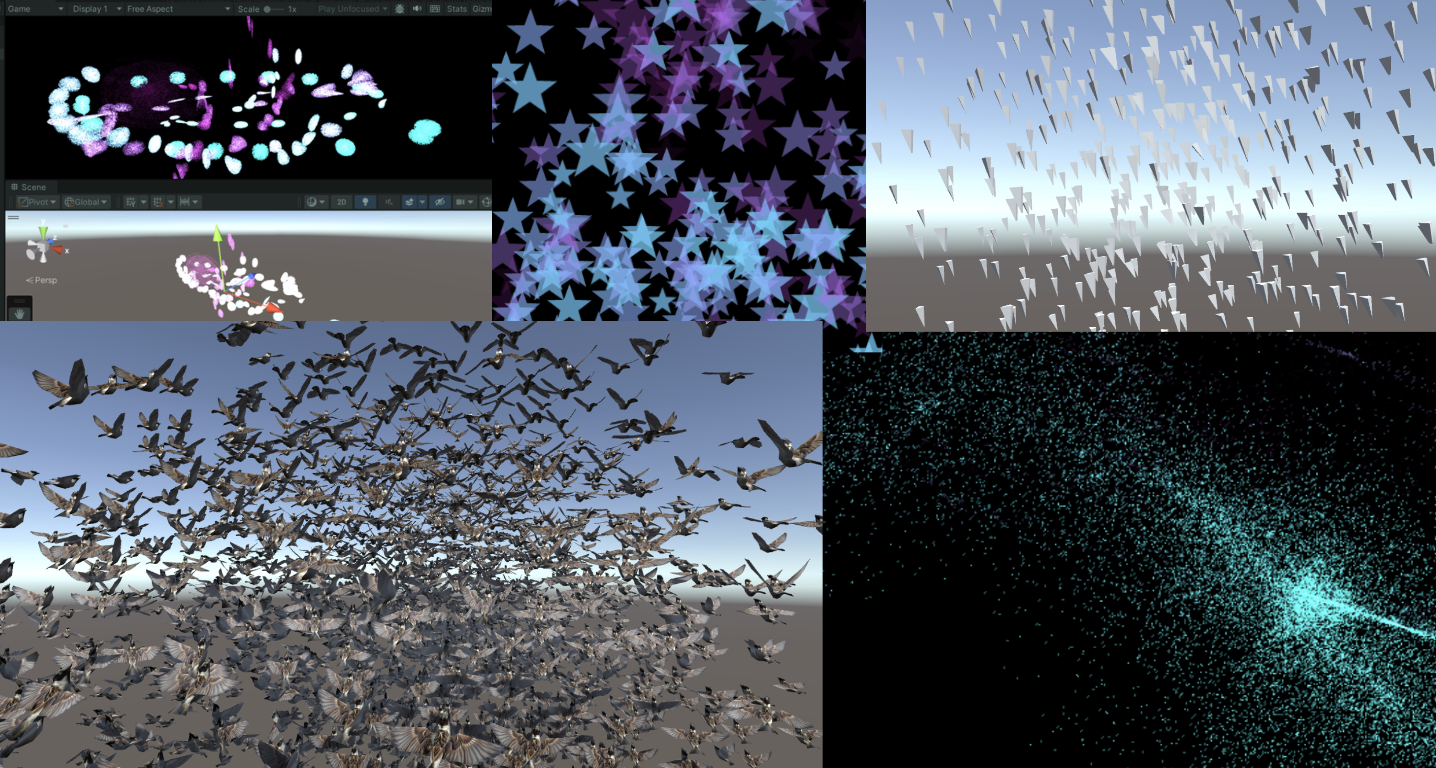

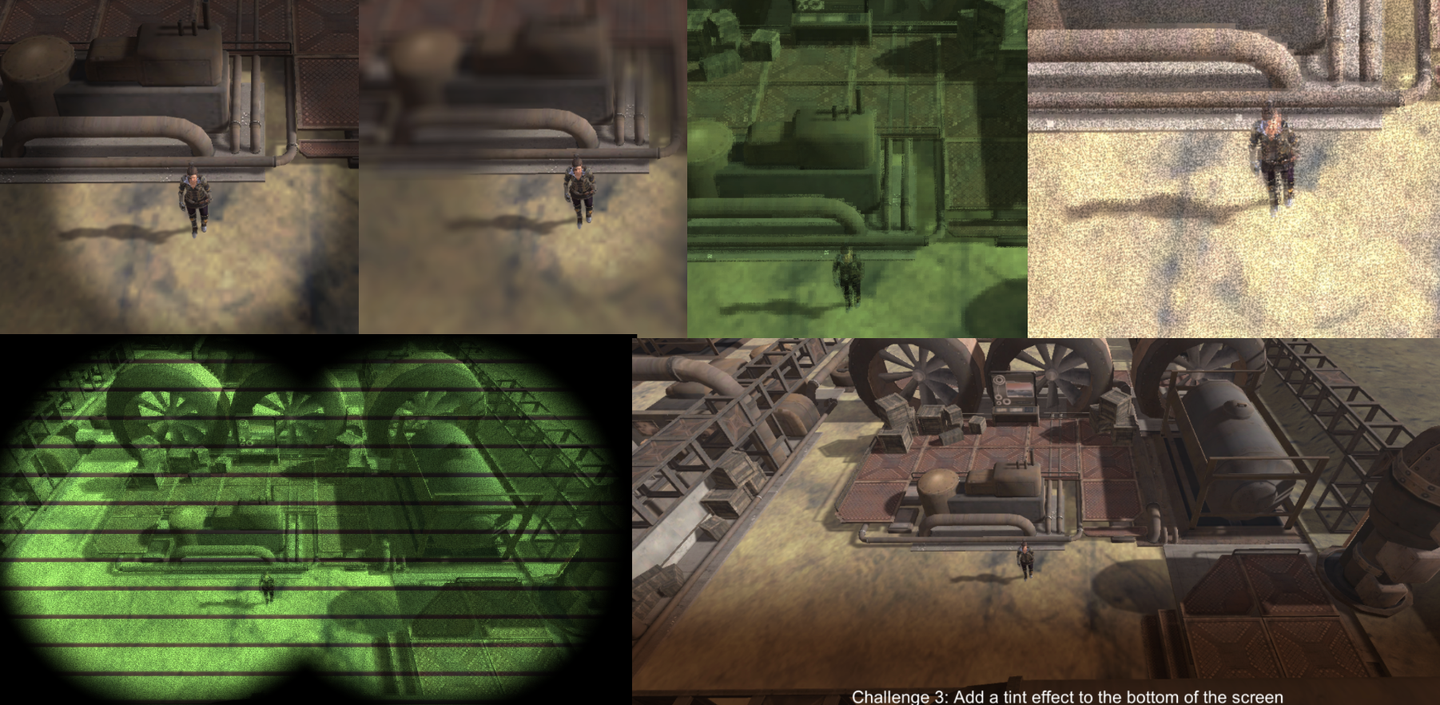

There are many ways to render grass.

The simplest way is to apply a grass texture directly to the ground.

Another common approach is to drag individual grass meshes into the scene. This gives a lot of freedom, since every blade is under your control. Batching and similar techniques can reduce the CPU-to-GPU transfer cost, but this approach will wear out the Ctrl, C, V and D keys on your keyboard. That said, typing L(a, b) into a Transform field distributes the selected objects evenly between a and b, and R(a, b) randomizes them instead. For more related operations, see the official documentation.

Grass can also be generated with geometry shaders and tessellation shaders. This looks good, but one shader can only produce one type of geometry (grass); if you want to generate flowers or rocks on the same mesh, you have to modify the geometry shader code. That is not the most critical problem. The more serious one is that many mobile devices, and Metal, do not support geometry shaders at all, and where they are supported they may only be emulated in software with poor performance. On top of that, the grass geometry is regenerated every frame, which wastes performance.

Billboard rendering of grass is another widely used, long-standing method. It works very well when high fidelity is not required: it simply renders a quad with a texture and alpha clipping, drawn with DrawProcedural. However, it only holds up at a distance; viewed up close, the trick is exposed.

Unity's Terrain system can also draw very nice grass, and Unity uses instancing to keep it performant. Its best feature is the brush tool; if your workflow does not include the Terrain system, third-party plugins can do the same job.

While researching, I also came across Impostors. The technique is quite interesting: it combines the vertex savings of billboards with the ability to reproduce an object realistically from multiple angles. It "photographs" a real grass mesh from many angles in advance and stores the results in textures. At runtime, the texture matching the current camera's viewing direction is selected for rendering, so it is effectively an upgraded billboard. I think Impostors are well suited to large objects that players may view from many angles, such as trees or complex buildings. However, artifacts can appear when the camera is very close or when the view falls between two captured angles. A more reasonable setup is to use meshes at very close range, Impostors at medium range, and billboards at long range.

The method implemented in this article is based on GPU Instancing and can be called "per-blade mesh grass". This approach is used in games such as "Ghost of Tsushima", "Genshin Impact" and "The Legend of Zelda: Breath of the Wild". Every blade is a separate entity, and the lighting and shadows are quite realistic.

Rendering process:

Preface 2

Unity's instancing technology is quite complex, and I have only scratched the surface. Please correct me if you find any mistakes. The current code follows the documentation. GPU instancing currently supports the following platforms:

- Windows: DX11 and DX12 with SM 4.0 and above / OpenGL 4.1 and above

- OS X and Linux: OpenGL 4.1 and above

- Mobile: OpenGL ES 3.0 and above / Metal

- PlayStation 4

- Xbox One

Also note that Graphics.DrawMeshInstancedIndirect has been superseded by Graphics.RenderMeshIndirect, which should be used instead. This function calculates the bounding box automatically, but that is a story for later. For details, see the official documentation: RenderMeshIndirect. This article was also helpful:

https://zhuanlan.zhihu.com/p/403885438.

The principle of GPU Instancing is to draw many objects that share the same Mesh with a single draw call. The CPU first collects all the per-instance information, puts it into an array and sends it to the GPU in one go. The limitation is that these objects must use the same Material and the same Mesh. This is why so much grass can be drawn at once while keeping performance high. To draw millions of meshes with GPU Instancing, you need to follow a few rules:

- All meshes need to use the same Material

- Enable GPU Instancing on the Material

- The shader must support instancing

- Skinned Mesh Renderer is not supported

Because Skinned Mesh Renderer is not supported, in the previous article we bypassed SMR and passed the meshes of the different keyframes directly to the GPU. This is also why that question was raised at the end of the previous article.

There are two main types of instancing in Unity: GPU Instancing and Procedural Instancing (which involves Compute Shaders and indirect drawing). There is also the stereo rendering path (UNITY_STEREO_INSTANCING_ENABLED), which I won't go into here. In shaders, the former uses #pragma multi_compile_instancing and the latter uses #pragma instancing_options procedural:setup. For details, see the official documentation: Creating shaders that support GPU instancing.

Currently, custom GPU Instancing shaders like these are only supported in the Built-in Render Pipeline (BIRP), not in SRP.

Then there is UNITY_PROCEDURAL_INSTANCING_ENABLED. This macro indicates whether Procedural Instancing is enabled. When a Compute Shader or the indirect drawing API is used, per-instance attributes (such as position and color) can be computed on the GPU and used directly for rendering, without CPU involvement. In Unity's shader source, the core code for this macro is:

```hlsl
#ifdef UNITY_PROCEDURAL_INSTANCING_ENABLED
    #ifndef UNITY_INSTANCING_PROCEDURAL_FUNC
        #error "UNITY_INSTANCING_PROCEDURAL_FUNC must be defined."
    #else
        void UNITY_INSTANCING_PROCEDURAL_FUNC(); // Forward declaration of the procedural function
        #define DEFAULT_UNITY_SETUP_INSTANCE_ID(input) { UnitySetupInstanceID(UNITY_GET_INSTANCE_ID(input)); UNITY_INSTANCING_PROCEDURAL_FUNC();}
    #endif
#else
    #define DEFAULT_UNITY_SETUP_INSTANCE_ID(input) { UnitySetupInstanceID(UNITY_GET_INSTANCE_ID(input));}
#endif
```

The shader is therefore required to provide the function named by UNITY_INSTANCING_PROCEDURAL_FUNC, which in practice is the setup() function given in procedural:setup. If there is no setup() function, compilation fails with an error.

Generally speaking, the setup() function reads the current instance's data from the buffer (indexed by unity_InstanceID) and then computes that instance's attributes: position, transformation matrix, color, metalness, custom data, and so on.

GPU Instancing is just one of Unity's many optimization methods, and there is still much more to learn.

1. Swaying 3-Quad Grass

All the Compute Shader concepts used in this section were covered in the previous article; only the setting is different. Here is a simple diagram.

The implementation uses GPU Instancing, that is, rendering many copies of one mesh in a single call. The core code is just one line:

```csharp
Graphics.DrawMeshInstancedIndirect(mesh, 0, material, bounds, argsBuffer);
```

The Mesh is made of three quads, six triangles in total.

Then add a texture and an alpha test.

The data structure of grass:

- Position

- Tilt Angle

- Random noise value (used to calculate random tilt angles)

```csharp
struct GrassClump
{
    public Vector3 position; // World coordinates, need to be calculated
    public float lean;
    public float noise;

    public GrassClump(Vector3 pos)
    {
        position.x = pos.x;
        position.y = pos.y;
        position.z = pos.z;
        lean = 0;
        noise = Random.Range(0.5f, 1);
        if (Random.value < 0.5f) noise = -noise;
    }
}
```

A buffer of the grass to be rendered (whose world coordinates have to be calculated) is passed to the GPU. First decide where grass is generated and how much of it. Get the AABB of the current object's Mesh (assumed to be a Plane mesh for now):

```csharp
Bounds bounds = mf.sharedMesh.bounds;
Vector3 clumps = bounds.extents;
```

Determine the extent of the grass, then randomly generate grass on the xOz plane.
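As a standalone illustration of the scattering step, here is a small C++ sketch (C++ rather than C#, so it runs without Unity) that maps two uniform random numbers to a point on the xOz face of an object-space AABB. It uses the same Random.value * extents.x * 2 - extents.x + center.x expression that appears in the raycast spawning code later in this article. Vec3, SampleXOz and RandomPointXOz are hypothetical helpers for this sketch, not part of the project.

```cpp
#include <cassert>
#include <cmath>
#include <random>

// Hypothetical stand-in for Unity's Vector3, used only in this sketch.
struct Vec3 {
    float x, y, z;
};

// Maps uniform samples u, v in [0, 1] to a point on the xOz face of an
// axis-aligned box (center, extents = half-size), using the same expression
// as Random.value * extents.x * 2 - extents.x + center.x in the C# code.
Vec3 SampleXOz(Vec3 center, Vec3 extents, float u, float v) {
    return Vec3{u * extents.x * 2.0f - extents.x + center.x,
                0.0f,  // height is decided later (flat plane or raycast)
                v * extents.z * 2.0f - extents.z + center.z};
}

// Draws one random object-space position with a caller-owned generator.
Vec3 RandomPointXOz(Vec3 center, Vec3 extents, std::mt19937& rng) {
    std::uniform_real_distribution<float> dist(0.0f, 1.0f);
    float u = dist(rng);
    float v = dist(rng);
    return SampleXOz(center, extents, u, v);
}
```

The result is still in object space; in Unity it would then go through transform.TransformPoint.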


Note that these positions are still in object space, so they need to be converted from object space to world space:

```csharp
pos = transform.TransformPoint(pos);
```

Combining the density parameter with the object's scale, calculate how many clumps of grass to render in total:

```csharp
Vector3 vec = transform.localScale / 0.1f * density;
clumps.x *= vec.x;
clumps.z *= vec.z;
int total = (int)clumps.x * (int)clumps.z;
```

Since each Compute Shader thread computes one clump of grass, the number of clumps to render is very likely not a multiple of the thread-group size. The count is therefore rounded up to a multiple of the thread-group size. In other words, even when only a few clumps are needed, at least one full thread group's worth is dispatched:

```csharp
groupSize = Mathf.CeilToInt((float)total / (float)threadGroupSize);
int count = groupSize * (int)threadGroupSize;
```

Let the Compute Shader calculate the lean angle of each clump:

```hlsl
GrassClump clump = clumpsBuffer[id.x];
clump.lean = sin(time) * maxLean * clump.noise;
clumpsBuffer[id.x] = clump;
```

Writing the grass positions and lean angles into the GPU buffer is not the end: the Material still has to decide the final appearance of each rendered instance when Graphics.DrawMeshInstancedIndirect is executed.
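The clump count calculation from earlier in this section (scale the extents by localScale / 0.1 * density, truncate each axis to an int, then round up to a multiple of the thread-group size) can be checked in isolation. The C++ sketch below mirrors those C# steps but uses integer ceiling division instead of Mathf.CeilToInt. ComputeGrassCount and GrassCount are hypothetical names, and the thread-group size of 512 in the example is an assumed value; the real one comes from the kernel's numthreads.

```cpp
#include <cassert>

// Output of the count calculation.
struct GrassCount {
    int total;   // clumps requested by area and density
    int groups;  // thread groups to dispatch
    int count;   // clumps actually allocated: groups * threadGroupSize
};

// Mirrors the C# steps: vec = localScale / 0.1 * density, scale the extents,
// truncate each axis to int, multiply, then round up to whole thread groups.
// (total + size - 1) / size is integer ceiling division (assumes total >= 0).
GrassCount ComputeGrassCount(float extentX, float extentZ, float scaleX,
                             float scaleZ, float density, int threadGroupSize) {
    float vecX = scaleX / 0.1f * density;
    float vecZ = scaleZ / 0.1f * density;
    float clumpsX = extentX * vecX;
    float clumpsZ = extentZ * vecZ;
    int total = static_cast<int>(clumpsX) * static_cast<int>(clumpsZ);
    int groups = (total + threadGroupSize - 1) / threadGroupSize;
    return GrassCount{total, groups, groups * threadGroupSize};
}
```

For a default Unity Plane (10 × 10 units, so extents of 5 on x and z) at scale 1 and density 1, this gives 50 × 50 = 2500 clumps, which a 512-thread group size rounds up to 5 groups, or 2560 clumps.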

During rendering, before each instance is drawn (that is, in the procedural:setup function), unity_InstanceID tells us which clump is currently being rendered. Fetch that clump's world-space position and its lean value:

```hlsl
GrassClump clump = clumpsBuffer[unity_InstanceID];
_Position = clump.position;
_Matrix = create_matrix(clump.position, clump.lean);
```

The rotation + translation matrix in detail:

```hlsl
float4x4 create_matrix(float3 pos, float theta)
{
    float c = cos(theta); // Cosine of the rotation angle
    float s = sin(theta); // Sine of the rotation angle
    // Return a 4x4 transformation matrix: rotation about the z axis plus translation
    return float4x4(
        c, -s, 0, pos.x, // Row 1: rotation in the xy plane, x translation
        s,  c, 0, pos.y, // Row 2: rotation in the xy plane, y translation
        0,  0, 1, pos.z, // Row 3: z is unchanged by a rotation about z, z translation
        0,  0, 0, 1      // Row 4: homogeneous row (unchanged)
    );
}
```

How is this matrix derived? Substituting the axis (0, 0, 1) into Rodrigues' rotation formula gives a 3×3 rotation about the z axis; extending it to homogeneous coordinates adds the translation in the fourth column, which is exactly the matrix in the code.
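Written out as a worked derivation (with θ for theta and p for pos), Rodrigues' formula for a unit axis k, where K is the cross-product matrix of k, is:

$$
\mathbf{R} = \mathbf{I} + \sin\theta\,\mathbf{K} + (1-\cos\theta)\,\mathbf{K}^2,
\qquad
\mathbf{K} = \begin{pmatrix} 0 & -k_z & k_y \\ k_z & 0 & -k_x \\ -k_y & k_x & 0 \end{pmatrix}
$$

With $\mathbf{k} = (0, 0, 1)$:

$$
\mathbf{K} = \begin{pmatrix} 0 & -1 & 0 \\ 1 & 0 & 0 \\ 0 & 0 & 0 \end{pmatrix},
\qquad
\mathbf{K}^2 = \begin{pmatrix} -1 & 0 & 0 \\ 0 & -1 & 0 \\ 0 & 0 & 0 \end{pmatrix},
\qquad
\mathbf{R} = \begin{pmatrix} \cos\theta & -\sin\theta & 0 \\ \sin\theta & \cos\theta & 0 \\ 0 & 0 & 1 \end{pmatrix}
$$

Extending to homogeneous coordinates with the translation $\mathbf{p}$:

$$
\mathbf{M} = \begin{pmatrix} \mathbf{R} & \mathbf{p} \\ \mathbf{0}^\top & 1 \end{pmatrix}
= \begin{pmatrix} \cos\theta & -\sin\theta & 0 & p_x \\ \sin\theta & \cos\theta & 0 & p_y \\ 0 & 0 & 1 & p_z \\ 0 & 0 & 0 & 1 \end{pmatrix},
\qquad
\mathbf{M}\begin{pmatrix}\mathbf{v}\\ 1\end{pmatrix} = \begin{pmatrix}\mathbf{R}\mathbf{v} + \mathbf{p}\\ 1\end{pmatrix}
$$

Row for row, M is the float4x4 returned by create_matrix.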

Multiplying an object-space vertex by this matrix gives the tilted and translated vertex position:

```hlsl
v.vertex.xyz *= _Scale;
float4 rotatedVertex = mul(_Matrix, v.vertex);
v.vertex = rotatedVertex;
```

Now comes the problem: the grass is not a single plane, but a three-dimensional shape made of three quads.

If all vertices are simply rotated about the z axis, the roots of the grass shift noticeably.

Therefore, we use v.texcoord.y to lerp the vertex positions before and after the rotation. In this way, the higher the Y value of the texture coordinate (that is, the closer the vertex is to the top of the model), the greater the rotation effect on the vertex. Since the Y value of the grass root is 0, the grass root will not shake after lerp.

```hlsl
v.vertex.xyz *= _Scale;
float4 rotatedVertex = mul(_Matrix, v.vertex);
// v.vertex = rotatedVertex;
v.vertex.xyz += _Position;
v.vertex = lerp(v.vertex, rotatedVertex, v.texcoord.y);
```

The result is still poor, and the grass looks very fake. This kind of quad grass is only usable at a distance:

- Stiff swaying

- Rigid blades

- Poor lighting
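To see why blending by texcoord.y keeps the roots in place, here is the same vertex math transcribed into standalone C++ (row-major 4×4 matrices multiplied with column vectors, like mul(M, v) in HLSL). The _Scale step is left out, and CreateMatrix, SwayVertex and the other names are hypothetical helpers for this sketch.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>;  // row-major, like an HLSL float4x4

// Same layout as create_matrix: rotation by theta about z plus translation.
Mat4 CreateMatrix(float px, float py, float pz, float theta) {
    float c = std::cos(theta), s = std::sin(theta);
    return Mat4{{{c, -s, 0.0f, px},
                 {s, c, 0.0f, py},
                 {0.0f, 0.0f, 1.0f, pz},
                 {0.0f, 0.0f, 0.0f, 1.0f}}};
}

// mul(M, v) in HLSL: v is treated as a column vector.
Vec4 Mul(const Mat4& m, const Vec4& v) {
    Vec4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j) r[i] += m[i][j] * v[j];
    return r;
}

Vec4 Lerp(const Vec4& a, const Vec4& b, float t) {
    Vec4 r{};
    for (int i = 0; i < 4; ++i) r[i] = a[i] + (b[i] - a[i]) * t;
    return r;
}

// CPU version of the vertex-shader blend: the unrotated vertex is only
// translated, the rotated one goes through the full matrix, and uvY blends them.
Vec4 SwayVertex(const Vec4& v, float px, float py, float pz, float lean, float uvY) {
    Vec4 rotated = Mul(CreateMatrix(px, py, pz, lean), v);
    Vec4 shifted{v[0] + px, v[1] + py, v[2] + pz, v[3]};
    return Lerp(shifted, rotated, uvY);
}
```

With uvY = 0 the blend returns the merely translated vertex, so the root never moves; with uvY = 1 the tip receives the full lean.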

Current version code:

- script:https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L5_3QuadGrass/Assets/Scripts/GrassClumps.cs

- Shader:https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L5_3QuadGrass/Assets/Shaders/GrassClumps.shader

- Compute Shader:https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L5_3QuadGrass/Assets/Shaders/GrassClumps.compute

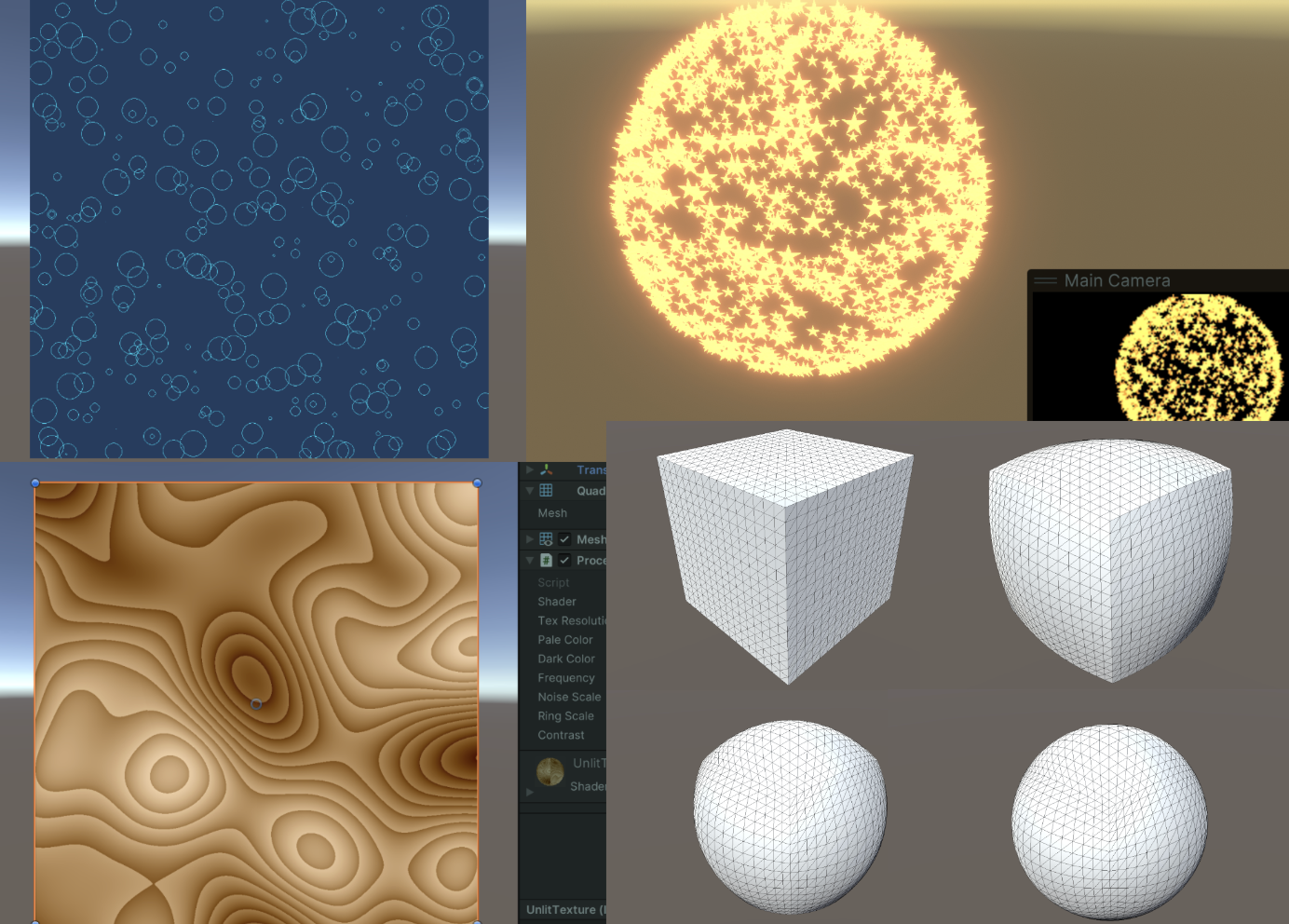

2. Stylized Grass

In the previous section, I used a few quads with an alpha-mapped grass texture and a sine wave for the sway, and the result was mediocre. Now I will switch to stylized grass and Perlin noise to improve it.

Define the grass blade's vertices, normals and UVs in C# and pass them to the GPU as a Mesh.

```csharp
Vector3[] vertices = {
    new Vector3(-halfWidth,      0,           0),
    new Vector3( halfWidth,      0,           0),
    new Vector3(-halfWidth,      rowHeight,   0),
    new Vector3( halfWidth,      rowHeight,   0),
    new Vector3(-halfWidth*0.9f, rowHeight*2, 0),
    new Vector3( halfWidth*0.9f, rowHeight*2, 0),
    new Vector3(-halfWidth*0.8f, rowHeight*3, 0),
    new Vector3( halfWidth*0.8f, rowHeight*3, 0),
    new Vector3( 0,              rowHeight*4, 0)
};
Vector3 normal = new Vector3(0, 0, -1);
Vector3[] normals = { normal, normal, normal, normal, normal, normal, normal, normal, normal };
Vector2[] uvs = {
    new Vector2(0, 0),     new Vector2(1, 0),
    new Vector2(0, 0.25f), new Vector2(1, 0.25f),
    new Vector2(0, 0.5f),  new Vector2(1, 0.5f),
    new Vector2(0, 0.75f), new Vector2(1, 0.75f),
    new Vector2(0.5f, 1)
};
```

Unity meshes also need the right triangle winding order, because it determines which side of each triangle is the front face. Unity treats triangles whose vertices appear in clockwise order from the camera as front faces; if the order is reversed and backface culling is enabled, the blade is culled and you won't see anything.
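One way to check an index list before handing it to Unity is to compute each triangle's signed area in the blade's plane: the sign gives the winding, and all triangles of one blade should share the same sign so that a single cull mode treats them consistently. The C++ sketch below does this for the nine blade vertices, with hypothetical values halfWidth = 0.5 and rowHeight = 0.25 and the row-by-row index list used for this blade (0,1,2, 1,3,2, and so on up to 6,7,8). V2, SignedArea2 and ConsistentWinding are hypothetical helpers.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

struct V2 {
    float x, y;
};

// Twice the signed area of triangle (a, b, c) in the xy plane, with x to the
// right and y up: positive = counter-clockwise, negative = clockwise.
// Viewing the blade from the other side flips every sign.
float SignedArea2(V2 a, V2 b, V2 c) {
    return (b.x - a.x) * (c.y - a.y) - (c.x - a.x) * (b.y - a.y);
}

// True if every triangle in the index list has the same (non-zero) winding sign.
template <std::size_t NV, std::size_t NI>
bool ConsistentWinding(const std::array<V2, NV>& verts, const std::array<int, NI>& idx) {
    static_assert(NI % 3 == 0, "index count must be a multiple of 3");
    float first = 0.0f;
    for (std::size_t t = 0; t < NI; t += 3) {
        float a = SignedArea2(verts[idx[t]], verts[idx[t + 1]], verts[idx[t + 2]]);
        if (a == 0.0f) return false;  // degenerate triangle
        if (t == 0) first = a;
        else if ((a > 0.0f) != (first > 0.0f)) return false;
    }
    return true;
}
```

If a row ever shows a different sign from the others, that row's indices were written in the opposite order.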

```csharp
int[] indices = {
    0, 1, 2,  1, 3, 2,  // row 1
    2, 3, 4,  3, 5, 4,  // row 2
    4, 5, 6,  5, 7, 6,  // row 3
    6, 7, 8             // row 4
};
mesh.SetIndices(indices, MeshTopology.Triangles, 0);
```

The wind direction, speed and noise scale are set in the script, packed into a float4 and passed to the Compute Shader, which uses them to calculate the sway direction of each blade.

```csharp
Vector4 wind = new Vector4(Mathf.Cos(theta), Mathf.Sin(theta), windSpeed, windScale);
```

The data structure of a grass blade:

```csharp
struct GrassBlade
{
    public Vector3 position;
    public float bend;  // Random blade tilt
    public float noise; // Noise value computed by the Compute Shader
    public float fade;  // Random blade brightness
    public float face;  // Blade facing

    public GrassBlade(Vector3 pos)
    {
        position.x = pos.x;
        position.y = pos.y;
        position.z = pos.z;
        bend = 0;
        noise = Random.Range(0.5f, 1) * 2 - 1;
        fade = Random.Range(0.5f, 1);
        face = Random.Range(0, Mathf.PI);
    }
}
```

At this point all blades face the same direction. In the setup function, first randomize the blade's facing:

```hlsl
// Create a rotation matrix around the Y axis (facing)
float4x4 rotationMatrixY = AngleAxis4x4(blade.position, blade.face, float3(0,1,0));
```

The logic for tilting the blades is as follows. (Because AngleAxis4x4 also applies a translation, the figure for this step shows only the tilt, without the random facing; to reproduce that figure, remember to add the translation in the code.)

```hlsl
// Create a rotation matrix around the X axis (tilt)
float4x4 rotationMatrixX = AngleAxis4x4(float3(0,0,0), blade.bend, float3(1,0,0));
```

Then combine the two rotation matrices.

```hlsl
_Matrix = mul(rotationMatrixY, rotationMatrixX);
```
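The order of the two matrices matters: with column vectors, mul(rotationMatrixY, rotationMatrixX) applies the X rotation (tilt) first and the Y rotation (facing) second. The standalone C++ sketch below (rotation parts only, angles in radians; RotX, RotY and Mul are hypothetical helpers) shows that swapping the order sends a blade tip somewhere else.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<float, 3>;
using Mat3 = std::array<std::array<float, 3>, 3>;  // row-major, used with column vectors

// Rotation about the x axis (tilt), same element layout as AngleAxis4x4 with axis (1,0,0).
Mat3 RotX(float a) {
    float c = std::cos(a), s = std::sin(a);
    return Mat3{{{1.0f, 0.0f, 0.0f}, {0.0f, c, -s}, {0.0f, s, c}}};
}

// Rotation about the y axis (facing), same layout as AngleAxis4x4 with axis (0,1,0).
Mat3 RotY(float a) {
    float c = std::cos(a), s = std::sin(a);
    return Mat3{{{c, 0.0f, s}, {0.0f, 1.0f, 0.0f}, {-s, 0.0f, c}}};
}

// Matrix product a * b.
Mat3 Mul(const Mat3& a, const Mat3& b) {
    Mat3 r{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k) r[i][j] += a[i][k] * b[k][j];
    return r;
}

// Matrix times column vector, like mul(M, v) in HLSL.
Vec3 Mul(const Mat3& m, const Vec3& v) {
    Vec3 r{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) r[i] += m[i][j] * v[j];
    return r;
}
```

Tilting first and then turning swings the already bent tip around the vertical axis, which is what a randomly facing, bent blade should do; the reverse order just bends every blade toward the same side.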

The lighting now looks wrong because the normals have not been transformed.

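```hlsl
// The upper-left 3x3 block of _Matrix is a pure rotation R. For a rotation,
// the inverse transpose (R^-1)^T equals R itself, so R transforms the normal directly.
float3x3 normalMatrix = (float3x3)_Matrix;
// Transform normal
v.normal = mul(normalMatrix, v.normal);
```

Transposing the matrix instead would rotate the normals the wrong way, because for a rotation matrix the transpose equals the inverse. For reference, a 3×3 transpose looks like this (HLSL already provides a built-in transpose intrinsic, so a hand-written helper needs a different name):

```hlsl
float3x3 Transpose3x3(float3x3 m)
{
    return float3x3(
        float3(m[0][0], m[1][0], m[2][0]), // Column 1 becomes row 1
        float3(m[0][1], m[1][1], m[2][1]), // Column 2 becomes row 2
        float3(m[0][2], m[1][2], m[2][2])  // Column 3 becomes row 3
    );
}
```

For readability, the rotation is wrapped in a helper that builds a 4×4 homogeneous transform from the well-known axis-angle (Rodrigues) rotation formula plus a translation: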

```hlsl
float4x4 AngleAxis4x4(float3 pos, float angle, float3 axis)
{
    float c, s;
    // angle is scaled by 2*3.14, so it is effectively a fraction of a full turn
    sincos(angle * 2 * 3.14, s, c);
    float t = 1 - c;
    float x = axis.x;
    float y = axis.y;
    float z = axis.z;
    return float4x4(
        t * x * x + c,     t * x * y - s * z, t * x * z + s * y, pos.x,
        t * x * y + s * z, t * y * y + c,     t * y * z - s * x, pos.y,
        t * x * z - s * y, t * y * z + s * x, t * z * z + c,     pos.z,
        0, 0, 0, 1
    );
}
```
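The rotation part of AngleAxis4x4 is orthonormal for any unit axis, and that is what keeps the normal transform simple: for a pure rotation R, the inverse transpose (R^-1)^T equals R, while the plain transpose R^T is the inverse rotation. The C++ sketch below transcribes the same matrix layout but takes the angle in radians (the HLSL version multiplies angle by 2 × 3.14). IsOrthonormal, TransformNormal and TransformNormalTransposed are hypothetical helpers for the check.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<float, 3>;
using Mat4 = std::array<std::array<float, 4>, 4>;  // row-major, used with column vectors

// Rodrigues rotation about a unit axis by `angle` radians, with `pos` in the
// last column: the same element layout as the HLSL AngleAxis4x4.
Mat4 AngleAxis4x4(const Vec3& pos, float angle, const Vec3& axis) {
    float s = std::sin(angle), c = std::cos(angle), t = 1.0f - c;
    float x = axis[0], y = axis[1], z = axis[2];
    return Mat4{{{t * x * x + c, t * x * y - s * z, t * x * z + s * y, pos[0]},
                 {t * x * y + s * z, t * y * y + c, t * y * z - s * x, pos[1]},
                 {t * x * z - s * y, t * y * z + s * x, t * z * z + c, pos[2]},
                 {0.0f, 0.0f, 0.0f, 1.0f}}};
}

// True if the upper-left 3x3 block R satisfies R * R^T = I (a pure rotation),
// in which case (R^-1)^T == R.
bool IsOrthonormal(const Mat4& m, float eps) {
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) {
            float dot = 0.0f;
            for (int k = 0; k < 3; ++k) dot += m[i][k] * m[j][k];
            float expected = (i == j) ? 1.0f : 0.0f;
            if (std::fabs(dot - expected) > eps) return false;
        }
    return true;
}

// n' = R n using the upper-left 3x3 block, like mul((float3x3)_Matrix, n).
Vec3 TransformNormal(const Mat4& m, const Vec3& n) {
    Vec3 r{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) r[i] += m[i][j] * n[j];
    return r;
}

// n' = R^T n: the inverse rotation, shown for comparison.
Vec3 TransformNormalTransposed(const Mat4& m, const Vec3& n) {
    Vec3 r{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) r[i] += m[j][i] * n[j];
    return r;
}
```

Rotating the blade's (0, 0, -1) normal by 90° about y with R gives (-1, 0, 0), while R^T gives (1, 0, 0), the mirror-image direction.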

What if you want to spawn on uneven ground?

Only the logic that sets each blade's initial height needs to change: add a MeshCollider and use raycasting.

```csharp
bladesArray = new GrassBlade[count];
gameObject.AddComponent<MeshCollider>();

RaycastHit hit;
Vector3 v = new Vector3();
Debug.Log(bounds.center.y + bounds.extents.y);
v.y = (bounds.center.y + bounds.extents.y);
v = transform.TransformPoint(v);
float heightWS = v.y + 0.01f; // Small offset against floating-point error
v.Set(0, 0, 0);
v.y = (bounds.center.y - bounds.extents.y);
v = transform.TransformPoint(v);
float neHeightWS = v.y;
float range = heightWS - neHeightWS;
// heightWS += 10; // Increase the offset slightly if needed; tune it yourself

int index = 0;
int loopCount = 0;
while (index < count && loopCount < (count * 10))
{
    loopCount++;
    Vector3 pos = new Vector3(
        Random.value * bounds.extents.x * 2 - bounds.extents.x + bounds.center.x,
        0,
        Random.value * bounds.extents.z * 2 - bounds.extents.z + bounds.center.z);
    pos = transform.TransformPoint(pos);
    pos.y = heightWS;
    if (Physics.Raycast(pos, Vector3.down, out hit))
    {
        pos.y = hit.point.y;
        GrassBlade blade = new GrassBlade(pos);
        bladesArray[index++] = blade;
    }
}
```

Here, for each candidate position a ray is cast straight down from just above the top of the mesh bounds, and the hit point gives the blade its correct height.

You can also adjust it so that the higher the altitude, the sparser the grass.

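As shown in the figure for this step, compute the ratio of the two green arrows: the hit point's height above the lowest point, divided by the total height range. The higher the point, the lower the probability of spawning grass there.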

```csharp
float deltaHeight = (pos.y - neHeightWS) / range;
if (Random.value > deltaHeight)
{
    // Grass
}
```
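The density falloff can be checked the same way. In the C++ sketch below, KeepBlade applies the same test as the C# snippet (keep the blade when a uniform sample exceeds the normalized height), so a point a quarter of the way up the range is kept about 75% of the time. The function names are hypothetical; range must be positive, which the 0.01 offset added to heightWS guarantees even on flat ground.

```cpp
#include <cassert>
#include <random>

// Height of y within [minHeight, minHeight + range], normalized to 0..1.
float NormalizedHeight(float y, float minHeight, float range) {
    return (y - minHeight) / range;
}

// Same test as the C# snippet: keep the blade when the uniform sample u
// exceeds the normalized height, so the keep probability is 1 - deltaHeight.
bool KeepBlade(float y, float minHeight, float range, float u) {
    float deltaHeight = NormalizedHeight(y, minHeight, range);
    return u > deltaHeight;
}
```

Because rejected candidates simply loop again, the while loop's count * 10 cap is what stops it on very steep or tall meshes where most candidates are rejected.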

Current code link:

- CSharp:https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L5_PG/Assets/Scripts/GrassBlades.cs

- Shader:https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L5_PG/Assets/Shaders/GrassBlades.shader

- Compute Shader:https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L5_PG/Assets/Shaders/GrassBlades.compute

Lighting and shadows now look correct.

3. Interactive Grass

In the previous section, we first rotated the blade's facing and then changed its tilt. Now another rotation is needed: when an object approaches, the grass should bend away from it. This extra rotation is awkward to combine with the others as matrices, so the rotations are switched to quaternions. The quaternion is computed in the Compute Shader, stored in the grass blade struct and passed to the material. Finally, the vertex shader converts the quaternion back into an affine matrix to apply the rotation.

Here we also add a random width and height to each blade. Since every instance shares the same mesh, the height can't be changed by editing the mesh, so the vertices are offset in the vertex shader instead.

```csharp
// C#
[Range(0, 0.5f)] public float width = 0.2f;
[Range(0, 1f)]   public float rd_width = 0.1f;
[Range(0, 2)]    public float height = 1f;
[Range(0, 1f)]   public float rd_height = 0.2f;

GrassBlade blade = new GrassBlade(pos);
blade.height = Random.Range(-rd_height, rd_height);
blade.width = Random.Range(-rd_width, rd_width);
bladesArray[index++] = blade;
```

```hlsl
// At the start of setup()
GrassBlade blade = bladesBuffer[unity_InstanceID];
_HeightOffset = blade.height_offset;
_WidthOffset = blade.width_offset;
```

```hlsl
// At the start of vert()
float tempHeight = v.vertex.y * _HeightOffset;
float tempWidth = v.vertex.x * _WidthOffset;
v.vertex.y += tempHeight;
v.vertex.x += tempWidth;
```

To sum up, the grass buffer now stores:

```csharp
struct GrassBlade
{
    public Vector3 position;      // World position - must be initialized
    public float height;          // Height offset - must be initialized
    public float width;           // Width offset - must be initialized
    public float dir;             // Blade orientation - must be initialized
    public float fade;            // Random shading - must be initialized
    public Quaternion quaternion; // Rotation: computed in the CS, used in vert
    public float padding;

    public GrassBlade(Vector3 pos)
    {
        position.x = pos.x;
        position.y = pos.y;
        position.z = pos.z;
        height = width = 0;
        dir = Random.Range(0, 180);
        fade = Random.Range(0.99f, 1);
        quaternion = Quaternion.identity;
        padding = 0;
    }
}
int SIZE_GRASS_BLADE = 12 * sizeof(float);
```

The quaternion q that rotates vector v1 onto vector v2 is:

```hlsl
float4 MapVector(float3 v1, float3 v2)
{
    v1 = normalize(v1);
    v2 = normalize(v2);
    float3 v = v1 + v2; // Half-way vector between v1 and v2
    v = normalize(v);
    float4 q = 0;
    q.w = dot(v, v2);
    q.xyz = cross(v, v2);
    return q;
}
```

To combine two rotation quaternions, multiply them (note the order).

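Suppose there are two quaternions $q_1 = w_1 + \mathbf{v}_1$ and $q_2 = w_2 + \mathbf{v}_2$. Their product is:

$$
q_1 q_2 = \left(w_1 w_2 - \mathbf{v}_1 \cdot \mathbf{v}_2\right) + \left(w_1 \mathbf{v}_2 + w_2 \mathbf{v}_1 + \mathbf{v}_1 \times \mathbf{v}_2\right)
$$

where $w_1$ and $\mathbf{v}_1$ are the real and imaginary (vector) parts of $q_1$, and $w_2$ and $\mathbf{v}_2$ are the real and imaginary parts of $q_2$.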
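The product is easy to get wrong component by component, so here is a standalone C++ transcription of the formula (quaternions stored as (x, y, z, w) with w as the real part, like the float4 used in the shader), plus rotation of a vector as q v q*. Quat, QuatMultiply and Rotate are hypothetical names for this sketch. The checks use i·j = k and show that the product qy * qx applies qx first.

```cpp
#include <cassert>
#include <cmath>

// Quaternion stored as (x, y, z, w) with w the real part, like the shader's float4.
struct Quat {
    float x, y, z, w;
};

// Hamilton product a * b = (wa*wb - va.vb, wa*vb + wb*va + va x vb).
Quat QuatMultiply(Quat a, Quat b) {
    return Quat{a.w * b.x + a.x * b.w + a.y * b.z - a.z * b.y,
                a.w * b.y - a.x * b.z + a.y * b.w + a.z * b.x,
                a.w * b.z + a.x * b.y - a.y * b.x + a.z * b.w,
                a.w * b.w - a.x * b.x - a.y * b.y - a.z * b.z};
}

// Rotates v by the unit quaternion q as q * (v, 0) * conj(q).
void Rotate(Quat q, const float v[3], float out[3]) {
    Quat p{v[0], v[1], v[2], 0.0f};
    Quat conj{-q.x, -q.y, -q.z, q.w};
    Quat r = QuatMultiply(QuatMultiply(q, p), conj);
    out[0] = r.x;
    out[1] = r.y;
    out[2] = r.z;
}
```

Since (qb qa) v (qb qa)* = qb (qa v qa*) qb*, the right-hand factor acts first, exactly like column-vector matrix products.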

```hlsl
float4 quatMultiply(float4 q1, float4 q2)
{
    // Quaternions are stored as (x, y, z, w), with w the real part
    // Result = q1 * q2
    return float4(
        q1.w * q2.x + q1.x * q2.w + q1.y * q2.z - q1.z * q2.y, // X component
        q1.w * q2.y - q1.x * q2.z + q1.y * q2.w + q1.z * q2.x, // Y component
        q1.w * q2.z + q1.x * q2.y - q1.y * q2.x + q1.z * q2.w, // Z component
        q1.w * q2.w - q1.x * q2.x - q1.y * q2.y - q1.z * q2.z  // W (real) component
    );
}
```

To decide which way the grass should fall, we need the position of the interacting object (the trampler), i.e. its Transform. Its position is passed to the Compute Shader every frame with SetVector; because this happens every frame, the property name is converted to an integer ID once with Shader.PropertyToID instead of being looked up by string each time. We also need to define how far from the trampler grass falls over and how it transitions between trampled and upright, so a trampleRadius is passed to the GPU as well. Since it is a constant, it does not need to be updated every frame and can simply be set by name.

```csharp
// CSharp
public Transform trampler;
[Range(0.1f, 5f)] public float trampleRadius = 3f;
...
Init()
{
    shader.SetFloat("trampleRadius", trampleRadius);
    tramplePosID = Shader.PropertyToID("tramplePos");
}
Update()
{
    shader.SetVector(tramplePosID, pos);
}
```

In this section, all rotations are computed together in the Compute Shader, and a single quaternion is handed directly to the material. q1 is the quaternion for the random facing, q2 for the random tilt, and qt for the interactive tilt. An interaction strength coefficient can also be exposed in the Inspector.

```hlsl
[numthreads(THREADGROUPSIZE,1,1)]
void BendGrass (uint3 id : SV_DispatchThreadID)
{
    GrassBlade blade = bladesBuffer[id.x];
    float3 relativePosition = blade.position - tramplePos.xyz;
    float dist = length(relativePosition);
    float4 qt;
    if (dist …
```

The quaternion is then converted to a rotation matrix as follows:

```hlsl
float4x4 quaternion_to_matrix(float4 quat)
{
    float4x4 m = float4x4(float4(0, 0, 0, 0), float4(0, 0, 0, 0), float4(0, 0, 0, 0), float4(0, 0, 0, 0));
    float x = quat.x, y = quat.y, z = quat.z, w = quat.w;
    float x2 = x + x, y2 = y + y, z2 = z + z;
    float xx = x * x2, xy = x * y2, xz = x * z2;
    float yy = y * y2, yz = y * z2, zz = z * z2;
    float wx = w * x2, wy = w * y2, wz = w * z2;
    m[0][0] = 1.0 - (yy + zz);
    m[0][1] = xy - wz;
    m[0][2] = xz + wy;
    m[1][0] = xy + wz;
    m[1][1] = 1.0 - (xx + zz);
    m[1][2] = yz - wx;
    m[2][0] = xz - wy;
    m[2][1] = yz + wx;
    m[2][2] = 1.0 - (xx + yy);
    m[0][3] = _Position.x;
    m[1][3] = _Position.y;
    m[2][3] = _Position.z;
    m[3][3] = 1.0;
    return m;
}
```

Then apply it:

```hlsl
void vert(inout appdata_full v, out Input data)
{
    UNITY_INITIALIZE_OUTPUT(Input, data);
    #ifdef UNITY_PROCEDURAL_INSTANCING_ENABLED
        float tempHeight = v.vertex.y * _HeightOffset;
        float tempWidth = v.vertex.x * _WidthOffset;
        v.vertex.y += tempHeight;
        v.vertex.x += tempWidth;
        // Apply the model vertex transformation
        v.vertex = mul(_Matrix, v.vertex);
        v.vertex.xyz += _Position;
        // The 3x3 part of _Matrix is a pure rotation, whose inverse transpose
        // is the rotation itself, so it transforms the normal directly
        v.normal = mul((float3x3)_Matrix, v.normal);
    #endif
}

void setup()
{
    #ifdef UNITY_PROCEDURAL_INSTANCING_ENABLED
        // Get the Compute Shader results
        GrassBlade blade = bladesBuffer[unity_InstanceID];
        _HeightOffset = blade.height_offset;
        _WidthOffset = blade.width_offset;
        _Fade = blade.fade;                               // Set shading
        _Matrix = quaternion_to_matrix(blade.quaternion); // Set the final rotation matrix
        _Position = blade.position;                       // Set position
    #endif
}
```
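To check MapVector and quaternion_to_matrix together, the C++ sketch below transcribes both (rotation part only, without the translation column) and verifies that the resulting matrix maps v1 onto v2. The half-vector construction works because the angle between v1 and h, and between h and v2, is half of the full angle θ, which is exactly what a unit quaternion (sin(θ/2)·axis, cos(θ/2)) stores. The helper names are hypothetical, and the construction breaks down when v1 and v2 point in opposite directions (v1 + v2 = 0).

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<float, 3>;
using Quat = std::array<float, 4>;                 // (x, y, z, w), w is the real part
using Mat3 = std::array<std::array<float, 3>, 3>;  // row-major, used with column vectors

float Dot(const Vec3& a, const Vec3& b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }

Vec3 Cross(const Vec3& a, const Vec3& b) {
    return Vec3{a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]};
}

Vec3 Normalize(const Vec3& v) {
    float len = std::sqrt(Dot(v, v));
    return Vec3{v[0] / len, v[1] / len, v[2] / len};
}

// Same construction as MapVector: (cross(h, v2), dot(h, v2)) with h the
// half-way vector equals (sin(theta/2) * axis, cos(theta/2)).
Quat MapVector(Vec3 v1, Vec3 v2) {
    v1 = Normalize(v1);
    v2 = Normalize(v2);
    Vec3 h = Normalize(Vec3{v1[0] + v2[0], v1[1] + v2[1], v1[2] + v2[2]});
    Vec3 axis = Cross(h, v2);
    return Quat{axis[0], axis[1], axis[2], Dot(h, v2)};
}

// Rotation part of quaternion_to_matrix.
Mat3 QuaternionToMatrix(const Quat& q) {
    float x = q[0], y = q[1], z = q[2], w = q[3];
    float x2 = x + x, y2 = y + y, z2 = z + z;
    float xx = x * x2, xy = x * y2, xz = x * z2;
    float yy = y * y2, yz = y * z2, zz = z * z2;
    float wx = w * x2, wy = w * y2, wz = w * z2;
    return Mat3{{{1.0f - (yy + zz), xy - wz, xz + wy},
                 {xy + wz, 1.0f - (xx + zz), yz - wx},
                 {xz - wy, yz + wx, 1.0f - (xx + yy)}}};
}

// Matrix times column vector, like mul(M, v) in HLSL.
Vec3 Mul(const Mat3& m, const Vec3& v) {
    Vec3 r{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) r[i] += m[i][j] * v[j];
    return r;
}
```

For example, mapping the blade's up axis (0, 1, 0) onto (1, 0, 0) yields q = (0, 0, -0.7071, 0.7071), a -90° turn about z, and the matrix built from it sends (0, 1, 0) to (1, 0, 0).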

Current code link:

- CSharp:https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L5_Finally/Assets/Scripts/GrassBlades.cs

- Shader:https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L5_Finally/Assets/Shaders/GrassBlades.shader

- Compute Shader:https://github.com/Remyuu/Unity-Compute-Shader-Learn/blob/L5_Finally/Assets/Shaders/GrassBlades.compute

4. Summary/Quiz

How do you programmatically get the thread group sizes of a kernel?

When defining a Mesh in code, the number of normals must be the same as the number of vertex positions. True or false.